Knowledge Library

Welcome to your go-to hub for all things platform engineering. Dive into expert insights with our blog, explore in-depth resources in our library, tune into inspiring conversations on our podcast, and watch engaging videos packed with valuable tech knowledge.

APIs are no longer isolated services; they are the connective tissue of modern applications, enabling seamless integration across platforms, microservices, and external ecosystems. Yet, as organizations scale their APIs, a...

1. Features & Customization Q: Can I tailor Cycloid to our specific workflows? A: Absolutely. Cycloid offers StackForms, customizable service catalog forms, plus Components, which allow modular configuration of individual...

What Are Day 2 Operations? To understand Day 2, you have to rewind a bit and look at the three phases that make up the full lifecycle of cloud infrastructure...

TL;DR MSPs often struggle with scattered cloud access, inconsistent provisioning, and lack of client isolation. A Cloud Management Portal (CMP) tailored for MSPs helps centralize infra control, enable team-specific access,...

Cloud Management Platforms (CMPs) abstract the underlying complexity of provisioning, managing, and securing infrastructure across cloud providers. A CMP can expose a unified Terraform catalog that provisions infrastructure in any...

“What is productivity in development? I mean, even with metrics like lead time, it might work against taking the time to do good things.” Julien ‘Seraf’ Syx, CTO & Product...

Every engineering team has seen it: a developer needs to spin up a new Kubernetes namespace to test a service in isolation. Or maybe deploy a temporary PostgreSQL database to...

The Internal Developer Platform - Bringing Unity to Platform Engineering

Learn what an Internal Developer Platform is, why you need one, and how it brings unity to platform engineering with this eBook.

Can Platform Engineering address data center energy shortages?

In this eBook, we dive into how platform engineering can play a pivotal role in tackling the energy crisis faced by data centers.

The Art of Platform Engineering

This ebook will clear up any confusion you may have between Platform Engineering, Internal and self-service portals.

The Definitive Guide to GreenOps

This ebook will be your roadmap to success in a world where environmental responsibility takes center stage.

Guardians of the Cloud: Volume 2

Part 2 of our comic book sees the start of an environmental rebellion and attempts to use cloud resources more efficiently.

In It For The Long Haul: Platform Engineering in the Age of Sustainability

Enable smarter, more environmentally conscious cloud consumption decisions at every level of a business, and more efficient processes for your teams.

Guardians of the Cloud: Volume 1

Welcome to Guardians of the Cloud, a brand-new comic book series that takes you on an unforgettable journey to Cloud City – a place of endless innovation that harbors a deep secret.

Life in the fast lane: DevOps and hybrid cloud mastery

In this ebook, we show you how to roll out DevOps and hybrid cloud at the same time, while taking as few risks as possible.

IAC Migration for forward-thinking businesses

Read how to alleviate some of your infra-related anxieties with a simple tool. Here are the answers to some of your most burning questions.

Infosheet: hybrid cloud - bring it to the devs

Are you a team leader tasked with bringing hybrid cloud to your team? We’ve put together 5 practical, actionable tips to make the rollout easier and the DevX smoother.

Insight: Businesses need to start thinking about that DevX Factor

We believe that you should empower your existing teams to reach their full DevOps potential. How to deliver that DevX factor – insight by Rob Gillam.

Insight: How do you solve a problem like Cloud Waste?

Left unsupervised, running cloud expenses add up and can cost you success. Read about Cycloid’s solution to cloud cost estimation and monitoring – insight by founder Benjamin Brial.

Infosheet: Infra as Code by Cycloid

We believe Infra as Code is the only approach to software development that lets you scale safely and successfully, so let us soothe your concerns and lead you confidently into the wonderful world of IaC with this infosheet.

Infosheet: Governance with Cycloid

We know, we know. Governance isn’t cool, but it is essential! Cycloid builds it in from the ground up, so your experts have all the control they need, without cramping the style of the rest of your team. This infosheet explains our approach and what tools you’ll have at your disposal.

Cheatsheet: DevOps business value - make the case to the c-suite

It’s not the message that needs to change, it’s the way you deliver it! We’ve created an infosheet with a new perspective on sharing DevOps and tech metrics with a non-tech audience.

Ebook: DevOps business value: prove it or lose it

We’ve written this ebook to help tech team leaders create better, more productive relationships with the executive team, even if you’ve really had problems communicating in the past.

Whitepaper: Get Your Team Ready for Increased Automation

This whitepaper consolidates the 3 ebooks that make up the hugely popular Plan Now, Win Later ebook series and will show you how to lead your tech team into a DevOps-first future!

Ebook: Build a culture of operational safety

DevOps will help you scale, but scaling is dangerous if you have no safeguards in place. This ebook shows you how to keep your SDLC safe, no matter what.

Ebook: Make tools and schedules work with your team

The grind – or smoothness – of their daily work is what’s going to make or break your team. Set them up for success from day one – we show you how in this ebook.

We talk to Wilco Burggraaf, Green IT lead at HighTech Innovators, to shine the spotlight on the world of Green Coding – a transformative approach that prioritizes energy efficiency.

We sat down with Sean Varley, Chief Evangelist and VP of Business Development at Ampere, to discuss the intersection of AI, cryptocurrency, and sustainable technology.

Donal Daly, the visionary founder of Future Planet, joins us this time and takes us on a compelling exploration into the realm of ESG (Environmental, Social, and Governance) strategies.

We talk to Guillaume Thibaux, Founder & CTO of Quanta.io, shining the spotlight on his visionary company that has pioneered a free solution to measure the environmental impact of a website.

Keep an eye on this page to view upcoming episodes.

Next Episode Launch date: February 2025

Product demos

Cycloid Platform Engineering Demo Video

Improve developer experience, empower end-users, and boost operational efficiency to shorten time to market with Cycloid.

Improve DevX with Cycloid Platform Engineering

Cycloid Platform Engineering uses a simple, user-friendly self-service portal and service catalog to empower your end-users.

Feature demos

Calculate the cost of resources before you deploy - Cloud Cost Estimation Tool

Cloud Cost Estimation is integrated within our developer self-service portal, StackForms, to help you make cost-optimized decisions before you deploy.

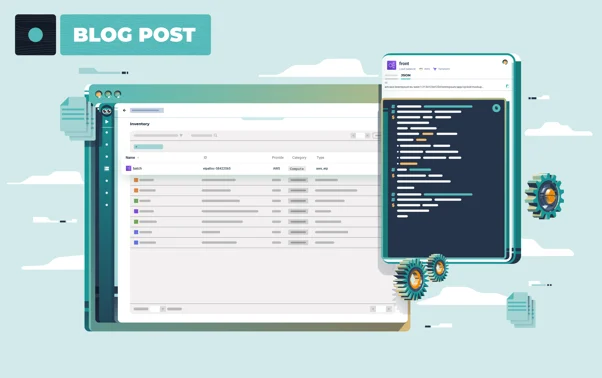

Simple service catalog - Stacks

Preconfigure user-friendly Stacks and allow your devs to choose approved and suitable infra configurations from a custom service catalog that’s made to measure.

Reverse Terraform tool: Infra Import 2024

Infra Import industrializes your manually deployed infrastructure at scale by automatically generating Terraform files, turning it into IaC. Modernize your infra and future-proof your business with Cycloid.