Knowledge Library

Welcome to your go-to hub for all things platform engineering. Dive into expert insights with our blog, explore in-depth resources in our library, tune into inspiring conversations on our podcast, and watch engaging videos packed with valuable tech knowledge.

What is Asset Inventory Management in the Cloud?

To understand asset inventory management in the cloud, first ask yourself: What is asset inventory? Traditionally, an inventory asset referred to physical items like servers, storage devices, or networking equipment in data centers. These assets had fixed locations, purchase dates, and clearly defined lifecycles. But cloud asset inventory changes this entirely. Today, inventory is considered an asset only if you have complete visibility into it. Resources are ephemeral—virtual machines, containers, databases, and networks can appear and vanish within minutes. Without proper asset inventory management, tracking these dynamic resources becomes nearly impossible. Here's a simplified overview of how inventory management typically works, especially relevant for cloud environments: As shown, effective asset inventory management involves a clear, repeatable cycle:- Identify Assets: Discover all existing cloud resources across multiple providers.

- Record Asset Details: Capture essential details such as resource type, location, ownership, and billing information.

- Track Asset Lifecycle: Monitor assets from provisioning through active usage to eventual retirement or deletion.

- Monitor Usage & Status: Regularly track resource utilization to avoid unnecessary costs or downtime.

- Perform Regular Audits: Periodically verify resources, ensuring accurate inventory and compliance.

- Update Records: Adjust inventory records based on audit results or infrastructure changes.

- Generate Reports & Insights: Provide actionable data on resource allocation, cost, and compliance.

- Optimize Inventory: Continuously refine resource allocation and lifecycle management for improved efficiency.

What are the Challenges within Multi-Cloud Inventory?

Now in multi-cloud environments, things get even more complicated. Each provider has its own way of handling inventory, permissions, and tracking. Without a well-defined strategy, visibility becomes fragmented, and operational overhead increases. Let’s take a look at some of the challenges that teams face when trying to track assets across multiple cloud providers.Different APIs & Data Formats

AWS, GCP, Azure, and other cloud providers like Outscale and IONOS each have their own APIs and services for structuring and accessing cloud resources. While resource data is generally formatted in widely used standards like JSON or CSV, the methods for retrieving it - via CLI tools, SDKs, or direct API calls - vary significantly across providers. For example, AWS provides resource visibility through AWS Config, GCP offers its Asset Inventory service, and Azure relies on Resource Graph for querying cloud resources. Similarly, European cloud providers like Outscale and IONOS have their own APIs and tools for resource management. Despite achieving similar goals, differences in APIs, authentication mechanisms, and command-line syntax mean organizations often require custom integrations or separate scripts to consolidate inventory data across multiple clouds. This adds complexity and overhead when creating a unified, centralized asset inventory view.Access & Permissions

IAM management is already complex within a single cloud provider, but managing roles, service accounts, and permissions across multiple clouds is an entirely different challenge. A role with overly permissive access in one cloud could create a security risk, while an untracked service account in another could become an attack vector. Ensuring consistent access policies across platforms is one of the hardest parts of multi-cloud asset management.Lack of a Single Source of Truth

In most organizations, asset data is scattered. Some teams rely on AWS Config, others use GCP Asset Inventory, and a few still maintain spreadsheets to track critical resources. When data is fragmented across multiple tools and platforms, no single dashboard provides a complete picture of what exists in the cloud, making audits and compliance checks a nightmare.Scaling Inventory Processes

What works for a small environment with 50 resources quickly falls apart when you’re managing 5,000+ resources across multiple accounts and regions. Manual tracking isn’t scalable, and without automation for tagging, reporting, and asset discovery, the process becomes unmanageable. Without proper guardrails, resources get lost, permissions drift, and costs spiral out of control. To overcome these challenges, teams need a more structured, automated approach to tracking cloud assets - one that works across providers and scales with infrastructure growth.What Are the Core Approaches to Track Asset Inventory Across Clouds?

Now, let’s go through some key methods that organizations use to maintain a reliable and up-to-date asset inventory.Native Cloud Services

Each cloud provider offers built-in tools for asset tracking. While they don’t work across platforms, they provide a great visibility into resources within their own ecosystem.- AWS Config – Tracks AWS resource changes automatically and records configurations over time.

- AWS Resource Explorer – Helps search for AWS resources across accounts and regions, making it easier to find orphaned or untagged assets.

- AWS Systems Manager (SSM) Inventory – Collects metadata about EC2 instances and applications, providing insight into running workloads.

- GCP Asset Inventory – Provides a real-time view of all GCP resources, including IAM roles and permissions.

- Azure Resource Graph – Allows for large-scale queries across Azure subscriptions to track deployed resources.

IaC State Files

Infrastructure as Code (IaC) offers a provider-agnostic way to manage cloud infrastructure, making it one of the most effective strategies for multi-cloud asset tracking.- Terraform State: Terraform maintains an internal state file (terraform.tfstate) that acts as an authoritative inventory of all provisioned cloud resources. Every time Terraform provisions or modifies infrastructure, this state file is updated. You can directly query Terraform state to list all managed resources by running commands such as terraform state list.

- Pulumi State – Similar to Terraform, Pulumi stores infrastructure state in a backend that records all provisioned resources across multiple clouds. This state file acts as Pulumi's inventory source. You can query your infrastructure inventory with pulumi stack export command. This command exports state as JSON, allowing you to extract resource inventory information clearly.

Hands-On: Automating Asset Inventory with Terraform & AWS

Now, instead of relying on engineers to track cloud assets manually, we'll automate asset inventory management using AWS Config. AWS Config serves as a built-in inventory management solution for AWS by continuously recording and maintaining a detailed list of all resources in your account. Each resource created, modified, or deleted is logged in real-time, providing a comprehensive, historical inventory stored securely in an S3 bucket. Let’s go through each step one by one.Step 1: Set Up AWS Config to Track All Resources

AWS Config is a service that keeps track of every AWS resource and logs any changes. It stores these records in S3, so you can go back and see what was created, deleted, or modified at any time. First, we need an S3 bucket to store AWS Config logs. Since every S3 bucket name must be unique, Terraform adds a random suffix to ensure no naming conflicts.| provider "aws" { region = "us-east-1" } resource "random_pet" "s3_suffix" { length = 2 separator = "-" } resource "aws_s3_bucket" "config_logs" { bucket = "cyc-infra-bucket-${random_pet.s3_suffix.id}-prod" force_destroy = true } Next, we create an IAM role that allows AWS Config to write logs to this S3 bucket. resource "aws_iam_role" "config_role" { name = "config-role-prod" assume_role_policy = jsonencode({ Version = "2012-10-17" Statement = [{ Effect = "Allow" Principal = { Service = "config.amazonaws.com" } Action = "sts:AssumeRole" }] }) } resource "aws_iam_role_policy_attachment" "config_s3_attach" { policy_arn = "arn:aws:iam::aws:policy/service-role/AWS_ConfigRole" role = aws_iam_role.config_role.name } Now, we enable AWS Config and tell it to track all AWS resources. resource "aws_config_configuration_recorder" "config_recorder" { name = "config-recorder-prod" role_arn = aws_iam_role.config_role.arn } resource "aws_config_delivery_channel" "config_delivery" { name = "config-channel-prod" s3_bucket_name = aws_s3_bucket.config_logs.id } resource "aws_config_configuration_recorder_status" "config_recorder_status" { name = aws_config_configuration_recorder.config_recorder.name is_enabled = true depends_on = [aws_config_delivery_channel.config_delivery] } |

| aws configservice describe-configuration-recorders |

Step 2: Enable AWS Resource Explorer

When you’re managing multiple AWS accounts, finding resources is a pain. AWS Resource Explorer makes it easier by allowing you to search for instances, databases, and other resources across all AWS accounts and regions. We enable AWS Resource Explorer using Terraform by creating an aggregated index that gathers data from all AWS accounts.| resource "aws_resourceexplorer2_index" "resource_explorer_index" { name = "resource-index-prod" type = "AGGREGATOR" } resource "aws_resourceexplorer2_view" "resource_explorer_view" { name = "resource-view-prod" scope = "ALL" } |

| aws resource-explorer-2 list-views |

Step 3: Automate Tag Enforcement with AWS Lambda & EventBridge

Many teams struggle with inconsistent resource tagging. Some engineers follow tagging rules, others forget, and some resources end up with no tags at all. Missing tags make it hard to track costs, enforce security, and find resources. To fix this, we create a Lambda function that automatically tags resources when they are created.| resource "aws_lambda_function" "tag_enforcer" { function_name = "tag-enforcer-prod" filename = "lambda.zip" source_code_hash = filebase64sha256("lambda.zip") handler = "index.handler" runtime = "python3.9" role = aws_iam_role.lambda_role.arn } |

| resource "aws_cloudwatch_event_rule" "resource_creation_rule" { name = "resource-creation-prod" description = "Triggers on new resource creation" event_pattern = jsonencode({ source = ["aws.ec2", "aws.s3", "aws.lambda"] detail-type = ["AWS API Call via CloudTrail"] detail = { eventSource = ["ec2.amazonaws.com", "s3.amazonaws.com", "lambda.amazonaws.com"] eventName = ["RunInstances", "CreateBucket", "CreateFunction"] } }) } |

| aws lambda list-functions | grep "tag-enforcer" |

Step 4: Use AWS Organizations for Multi-Account Management

If you’re managing multiple AWS accounts, keeping track of resources across accounts is a nightmare. AWS Organizations makes this easier by bringing all accounts under one umbrella, ensuring that they follow the same security and tagging rules. Terraform enables AWS Organizations to enforce account-wide policies.| resource "aws_organizations_organization" "org" { feature_set = "ALL" } |

| aws organizations describe-organization |

Step 5: Querying AWS Config for Tracked Resources

Now that AWS Config is actively tracking all resources in your AWS environment, the next step is to retrieve asset inventory reports. This helps teams understand what resources exist, their configuration history, and who owns them. To fetch all tracked AWS resources, use:| aws configservice list-discovered-resources --resource-type AWS::AllSupported --max-items 50 |

Step 6: Retrieve AWS Resource Configuration History

Now, tracking inventory changes over time is important for troubleshooting, security audits, and compliance tracking. To check the configuration history of an EC2 instance:| aws configservice get-resource-config-history \ --resource-type AWS::EC2::Instance \ --resource-id i-0a9b3f25c7d891e3f \ --limit 5 |

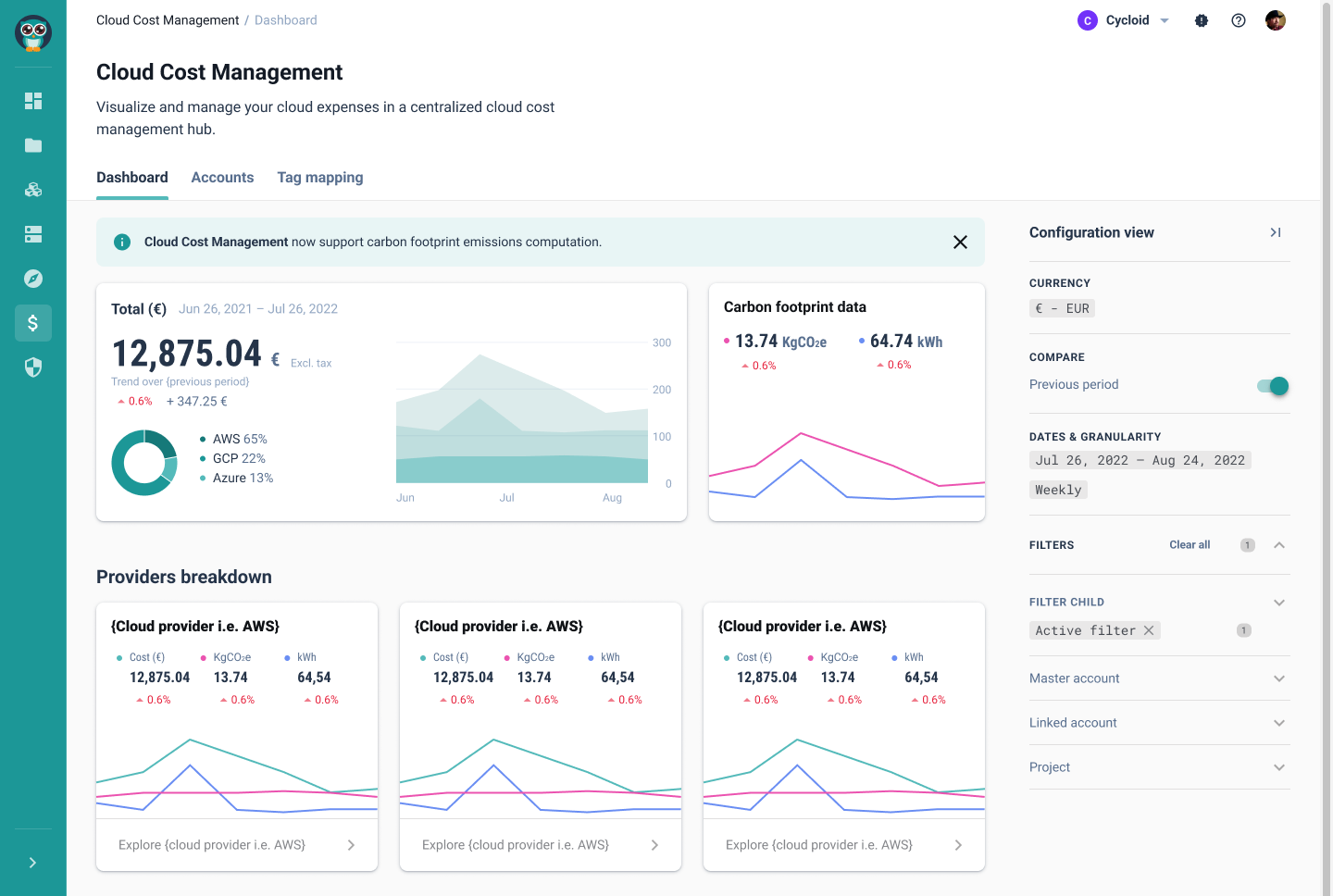

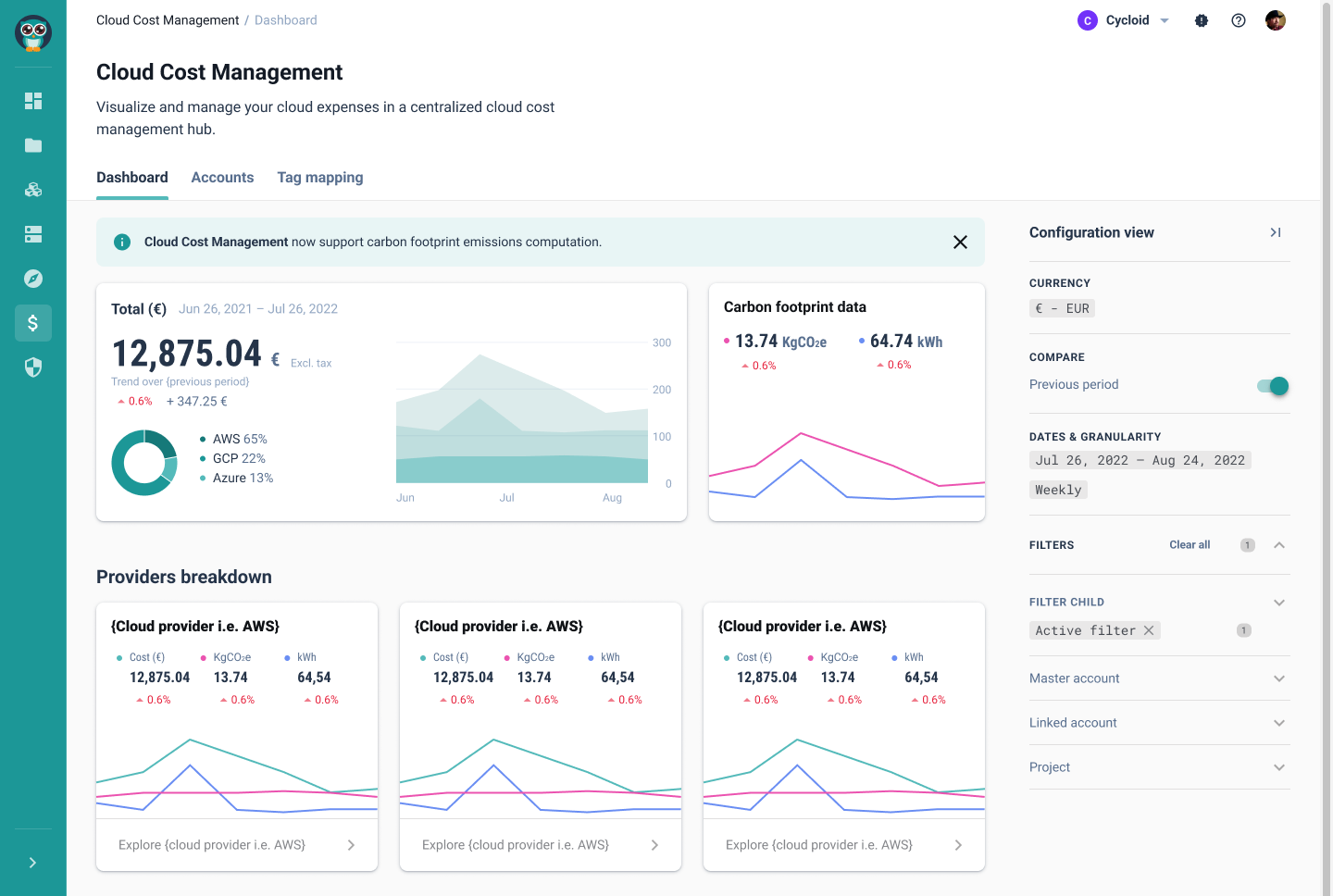

How Cycloid Handles Cloud Asset Management Across Multiple Clouds

This is where Cycloid steps in. Cycloid provides a unified asset inventory that integrates easily across multiple cloud providers. Instead of managing infrastructure separately for each cloud, Cycloid brings everything into one place, making it easier to track, standardize, and govern cloud assets. Teams managing infrastructure across multiple clouds often struggle with fragmented visibility. A resource might exist in AWS but have dependencies in GCP, and a networking component might reside in Azure. Without a single source of truth, DevOps teams end up switching between different consoles just to track their infrastructure. Cycloid eliminates this complexity by offering a centralized dashboard that consolidates all cloud assets in one place.- Access their favorite projects.

- Track cloud costs across multiple cloud providers.

- Monitor carbon emissions of infrastructure.

- Check recent activity logs, consolidating events across AWS, GCP, and Azure.

- Single, unified view: Provides visibility of all cloud assets, eliminating the need to log in to multiple cloud provider platforms.

- Real-time tracking: Offers centralized tracking of resource usage, ownership, and configuration changes.

- Projects: Top-level units grouping resources across AWS, GCP, and Azure.

- Environments: Multiple isolated environments within each project, such as development, staging, or production.

- Components: Individual resources or services like virtual machines, Kubernetes clusters, databases, or networking resources deployed within environments.

- Centralized inventory: Projects and environments automatically maintain accurate resource tracking.

- Lifecycle management: Consistently track, audit, and manage infrastructure inventory across multiple clouds.

- Fetch real-time cloud inventory data across AWS, GCP, and Azure without logging into multiple dashboards.

- Automate infrastructure reporting, making sure all cloud assets are properly tagged, allocated, and compliant.

- Integrate with existing DevOps pipelines, so cloud resource changes trigger workflows in tools like Terraform, CI/CD platforms, or monitoring solutions.

Conclusion

With a structured approach to cloud asset management, tracking resources across hybrid and multi-cloud environments becomes seamless. Automating inventory management ensures visibility, security, and cost control, eliminating manual inefficiencies. By now, you should have a clear understanding of how to manage cloud assets effectively, keeping your infrastructure organized, compliant, and scalable.Frequently Asked Questions

What is a Hybrid and Multi-cloud Approach?? A hybrid cloud combines on-premise infrastructure with public or private cloud services, while a multi-cloud approach uses multiple cloud providers (AWS, Azure, GCP) to avoid vendor lock-in and improve resilience. What is the Role of a Cloud Asset Inventory? A cloud asset inventory provides visibility, tracking, and governance over all cloud resources, helping teams monitor usage, enforce security policies, and optimize costs. What is the Best Way to Record Inventory? Automating asset tracking using cloud-native tools (AWS Config, GCP Asset Inventory), Infrastructure as Code (Terraform state files), and centralized dashboards ensures accuracy and real-time updates....What is Cloud Governance?

Cloud governance is the set of rules and controls that organizations use to manage cloud resources efficiently. These rules ensure that every resource follows security, compliance, and operational standards. Without governance, AWS environments can quickly become disorganized, and non-compliant.Risks of Poor Cloud Governance

Without a governance framework, misconfigurations and security gaps can easily go unnoticed by your teammates. Some common risks include:- Overly Permissive IAM Policies – Users and services may have more access than necessary, increasing security risks.

- Uncycd Storage – Publicly accessible S3 buckets or unencrypted EBS volumes may expose sensitive data.

- Weak Network Security – Poorly configured security groups and VPC rules can expose cloud resources to the internet.

- Compliance Failures – Without continuous monitoring, resources may drift away from industry standards like CIS, NIST, PCI-DSS, and ISO 27001.

The Role of Compliance Audits in Enforcing Governance Standards

A compliance audit is a structured process to check if cloud environments follow security and regulatory policies. These audits help organizations:- Detect Misconfigurations Early – Regular checks help identify security risks before they are exploited.

- Ensure Compliance with Industry Standards – Frameworks like SOC 2, HIPAA, and GDPR require strict security policies. Compliance audits verify adherence.

- Prevent Configuration Drift – Infrastructure may change over time. Audits ensure that cloud resources stay aligned with security baselines defined by the organization.

Defining Cloud Governance Policies with Terraform

Cloud environments require well-defined governance policies to maintain security, enforce compliance, and prevent misconfigurations. Without strict policy enforcement, AWS environments can become fragmented, introducing security risks and operational inconsistencies. Terraform provides a way to codify governance policies, ensuring they are consistently applied across all infrastructure deployments. Governance policies in AWS typically focus on identity and access management (IAM), storage security, and network security. Each of these domains plays a crucial role in enforcing security best practices. Let’s examine how Terraform helps implement and enforce these governance policies at scale.IAM Policies: Enforcing Least Privilege Access

IAM is the foundation of cloud security, controlling who can access resources and what actions they can perform. Over-permissioned IAM policies are a major security risk, often leading to privilege escalation and unauthorized data access. Enforcing the principle of least privilege (PoLP) ensures that identities only have the minimal permissions required to function. Terraform allows organizations to define IAM policies programmatically, ensuring uniform enforcement across environments. A common security requirement is restricting access to S3 buckets by assigning read-only permissions to a specific IAM role.| Effect = "Allow" Action = ["s3:GetObject"] Resource = "arn:aws:s3:::my-cyc-bucket/*" |

Storage Governance: Securing S3 and Enforcing Encryption

Misconfigured storage is one of the leading causes of data breaches in the cloud. Publicly accessible S3 buckets, unencrypted storage, and missing logging mechanisms can expose sensitive information. Terraform allows organizations to enforce storage security policies at the infrastructure level. S3 bucket policies can be configured to block public access, ensuring that no accidental exposure occurs. Encryption can also be enforced to protect data at rest, ensuring compliance with CIS, PCI-DSS, and NIST standards.| block_public_acls = true block_public_policy = true restrict_public_buckets = true |

Network Governance: Restricting Traffic and Enforcing VPC Security

Unrestricted network access poses a significant security threat in AWS environments. Misconfigured security groups, network ACLs (NACLs), and VPC peering rules can expose resources to the public internet, increasing the attack surface. Terraform enables teams to define security group rules as part of infrastructure deployments, ensuring that only authorized traffic flows into cloud workloads. If an organization wants to allow SSH access only from a trusted IP address, this can be enforced at deployment.| from_port = 22 to_port = 22 protocol = "tcp" cidr_blocks = ["203.0.113.0/32"] |

Scaling Governance Policies with Terraform

Manually managing security policies across multiple AWS accounts and environments introduces inconsistency and risk. Terraform provides a way to standardize governance policies, ensuring that security configurations remain declarative, version-controlled, and reproducible. By integrating Terraform with CI/CD pipelines, organizations can enforce security policies before deployment, preventing misconfigurations from ever reaching production. Terraform can also be used with AWS Config to detect configuration drift, alerting teams when resources deviate from the approved governance baseline. Effective governance requires continuous enforcement, real-time monitoring, and automated remediation. By using Terraform to manage IAM, storage, and network policies, organizations can proactively cyc their AWS environments, enforce compliance at scale, and eliminate any kind of security gaps within the infra.Automating Compliance Audits Using Terraform

Manually auditing cloud environments for compliance is inefficient, prone to errors, and difficult to scale. Security teams often rely on periodic reviews to identify misconfigurations, but by the time these audits are completed, the infrastructure may have already drifted away from compliance standards. Terraform addresses this challenge by automating compliance audits, ensuring that governance policies are continuously enforced and deviations are detected early. Compliance frameworks like CIS, NIST, and PCI-DSS provide security benchmarks that organizations must follow to cyc their cloud environments. Terraform enables teams to define these governance policies as code and validate infrastructure configurations against them. By integrating Terraform with AWS services like AWS Config, organizations can automatically detect and remediate non-compliant resources, reducing the risk of security incidents.Defining Compliance Checks in Terraform

The first step in automating audits is defining compliance baselines in Terraform. These baselines outline the required configurations for cloud resources, ensuring that security policies are applied consistently across deployments. For example, a compliance rule might state that all S3 buckets must be encrypted and block public access by default. Terraform can enforce this by defining security policies at the infrastructure level.| block_public_acls = true block_public_policy = true restrict_public_buckets = true |

Terraform’s Declarative Approach to Governance Audits

Terraform follows a declarative model, meaning infrastructure is defined in code and any deviations from the expected state are flagged. This makes it easier to enforce governance policies, as Terraform continuously compares the actual infrastructure state with the desired configuration. With the Terraform plan, teams can preview infrastructure changes before applying them. If a change violates compliance policies - such as enabling public access on an S3 bucket - Terraform will flag it, preventing accidental misconfigurations. This approach acts as an automated security check, ensuring that governance rules are followed throughout the infrastructure lifecycle.Integrating AWS Config with Terraform for Continuous Monitoring

While Terraform helps enforce governance at deployment, AWS Config ensures that compliance is maintained over time. AWS Config continuously monitors AWS resources and detects any drift from security baselines. When integrated with Terraform, AWS Config can automatically trigger alerts or remediation actions when a resource becomes non-compliant. For example, if an S3 bucket’s encryption setting is disabled, AWS Config can detect the change, and Terraform can be used to automatically reapply the correct security settings.| resource "aws_config_config_rule" "s3_encryption_check" { name = "s3-encryption-check" source { owner = "AWS" source_identifier = "S3_BUCKET_SERVER_SIDE_ENCRYPTION_ENABLED" } } |

Generating Governance Compliance Reports with Terraform

Governance audits often require detailed reporting to demonstrate compliance with regulatory frameworks. Terraform outputs can be used to generate compliance reports, providing visibility into security controls and infrastructure state. Terraform can output a list of non-compliant resources, allowing security teams to track violations and remediate them efficiently.| output "non_compliant_resources" { value = aws_config_config_rule.s3_encryption_check.arn } |

Proactive Compliance Auditing with Terraform

By automating compliance checks with Terraform, organizations move from a reactive to a proactive approach in cloud governance. Instead of waiting for audits to uncover security gaps, Terraform ensures that compliance policies are enforced before deployment and continuously monitored after deployment. With a combination of policy-as-code, AWS Config, and automated reporting, Terraform enables organizations to maintain a cyc, compliant, and well-governed AWS environment at scale.Hands-On: Implementing Cloud Governance with Terraform

Governance policies ensure that cloud environments remain cyc, compliant, and well-structured. However, defining policies alone is not enough - these rules must be enforced at every stage of infrastructure deployment. Terraform enables organizations to implement governance controls as code, ensuring that security policies are applied consistently across all AWS environments. This section focuses on setting up Terraform for governance enforcement, applying IAM policies to restrict access, securing S3 storage, enforcing network security rules, integrating AWS Config for compliance monitoring, and generating governance audit reports. By the end of this implementation, Terraform will automate governance policies, detect any deviation from security baselines, and ensure that AWS resources remain compliant.Setting Up Terraform for Governance Enforcement

Before applying governance policies, Terraform needs to be configured to interact with AWS. The first step is to verify AWS authentication by running:| aws sts get-caller-identity |

Enforcing IAM Policies with Terraform

Identity and Access Management (IAM) controls who can access cloud resources and what actions they can perform. Overly permissive IAM policies increase security risks, making it crucial to follow the principle of least privilege. Terraform allows IAM policies to be defined as code, ensuring uniform enforcement across environments. To restrict access to an S3 bucket, a policy can be defined that allows only read-only permissions. This prevents unauthorized modifications while ensuring data accessibility.| resource "aws_iam_policy" "s3_read_only" { name = "S3ReadOnlyAccess" description = "Provides read-only access to S3"policy = jsonencode({ Version = "2012-10-17", Statement = [ { Effect = "Allow", Action = ["s3:GetObject"], Resource = "arn:aws:s3:::prod-data-cyc/*" } ] }) } |

| aws iam list-policies --query "Policies[?PolicyName=='S3ReadOnlyAccess']" |

Securing S3 Buckets and Enforcing Encryption

Publicly accessible S3 buckets and unencrypted storage create vulnerabilities that can lead to data breaches. Terraform ensures that all S3 buckets are encrypted and block public access by default. A governance-compliant bucket configuration includes encryption enforcement and access restrictions.| resource "aws_s3_bucket" "cyc_bucket" { bucket = "org-finance-data" }resource "aws_s3_bucket_server_side_encryption_configuration" "cyc_bucket_encryption" { bucket = aws_s3_bucket.cyc_bucket.id rule { apply_server_side_encryption_by_default { sse_algorithm = "AES256" } } } resource "aws_s3_bucket_public_access_block" "bucket_block" { bucket = aws_s3_bucket.cyc_bucket.id block_public_acls = true block_public_policy = true restrict_public_buckets = true } |

| aws s3api get-public-access-block --bucket org-finance-data |

Enforcing Network Security with Terraform

Security groups play a crucial role in restricting network access. Misconfigured security groups can expose services to the public internet, making them vulnerable to unauthorized access. To enforce network security, Terraform can define strict inbound and outbound rules. For SSH access, the following configuration allows connections only from a specific trusted IP:| resource "aws_security_group" "restricted_sg" { name = "restricted_ssh" description = "Allows SSH access only from trusted IP" vpc_id = aws_vpc.main.idingress { from_port = 22 to_port = 22 protocol = "tcp" cidr_blocks = ["192.168.1.100/32"] } egress { from_port = 0 to_port = 0 protocol = "-1" cidr_blocks = ["0.0.0.0/0"] } } |

| aws ec2 describe-security-groups --group-ids sg-0123abcd5678efgh9 |

Integrating AWS Config for Governance Monitoring

AWS Config helps detect configuration drift and ensures that resources remain compliant with governance rules. Terraform can define a compliance rule to check if all S3 buckets are encrypted.| resource "aws_config_config_rule" "s3_encryption_check" { name = "s3-encryption-check" source { owner = "AWS" source_identifier = "S3_BUCKET_SERVER_SIDE_ENCRYPTION_ENABLED" } } |

| aws configservice describe-compliance-by-config-rule --config-rule-name s3-encryption-check |

Best Practices for Continuous Cloud Governance

Implementing governance policies with Terraform ensures a cyc cloud environment, but following best practices strengthens compliance, reduces misconfigurations, and prevents security drift.Version-Control Governance Policies

Storing Terraform configurations in Git allows teams to track changes, enforce approvals, and maintain an audit trail. Using branching strategies, such as GitFlow, prevents unauthorized modifications to governance rules.Secure Terraform State Files

Terraform state files contain sensitive information, including IAM roles, database credentials, and networking details. Enabling encryption for state files and using remote storage solutions like AWS S3 with state locking in DynamoDB makes sure that state files are not tampered with or accessed unintentionally.Conduct Regular Compliance Audits

Infrastructure changes, whether intentional or accidental, can introduce misconfigurations. Automating compliance checks using AWS Config and Terraform plan ensures that deviations from governance baselines are identified and corrected early.Integrate Terraform into CI/CD Pipelines

Running terraform validate and terraform plan as part of the pipeline ensures that changes adhere to security policies before reaching production. Combining Terraform with policy-as-code tools like Open Policy Agent (OPA) further strengthens governance by enforcing predefined rules within pipelines. Making sure that every infrastructure change adheres to governance policies is often difficult. Many security teams rely on manual checks, pre-deployment security reviews, and post-deployment audits to enforce compliance. While these methods can identify misconfigurations, they introduce delays and inconsistencies. A common challenge in governance enforcement is the lack of real-time validation. Organizations often detect non-compliant configurations only after resources are already deployed, leading to costly rollbacks and security risks. Without a centralized mechanism to enforce security, IAM, and networking policies before deployment, teams struggle with operational inefficiencies and increased exposure to misconfigurations. One approach to address this challenge is to integrate compliance checks into Terraform workflows. Security teams write custom validation scripts that run during the Terraform execution process, scanning configurations for policy violations. While this method improves governance, it introduces complexity. These scripts must be maintained, updated as security policies evolve, and integrated into CI/CD pipelines. Additionally, enforcement varies across teams, as different engineers may interpret policies differently.Enforcing Cloud Governance with Cycloid Infra Policies

Cycloid’s Infra Policies simplify governance enforcement by providing a centralized, automated approach to policy validation. Rather than relying on custom scripts, like running OPA commands repeatedly or waiting for team members to review your configurations, Cycloid allows DevOps teams to define governance rules as code. These policies are then automatically enforced during the Terraform plan execution, preventing misconfigurations before they make it to production. The first step in implementing governance policies with Cycloid is to create an Infra Policy in the Cycloid console. Navigate to Security -> InfraPolicies, where teams can define policy rules to enforce compliance standards.| - name: cycloid-resource type: registry-image source: repository: cycloid/cycloid-resource tag: latest |

| - name: infrapolicy type: cycloid-resource source: feature: infrapolicy api_key: ((cycloid_api_key)) api_url: ((cycloid_api_url)) env: ((env)) org: ((customer)) project: ((project)) |

| - put: infrapolicy params: tfplan_path: tfstate/plan.json |

Conclusion

Now, you should have a clear understanding of how Terraform automates governance, enforces compliance, and prevents misconfigurations. Integrating policy checks early ensures cyc, auditable, and compliant cloud environments.Frequently Asked Questions

1. Which AWS Cloud Service Enables Governance Compliance, Operational Auditing, and Risk Auditing of Your AWS Account?

AWS CloudTrail is the primary service that enables governance, compliance, operational auditing, and risk auditing of AWS accounts. It continuously records AWS API calls, providing visibility into user activity across AWS services. CloudTrail logs include details such as the identity of the caller, the time of the API call, the source IP address, and the request parameters. These logs help monitor changes, detect unusual activities, and ensure compliance with internal security policies and external regulations like GDPR, HIPAA, and SOC 2.2. Which AWS Service Continuously Audits AWS Resources and Enables Them to Assess Overall Compliance?

AWS Config is a managed service that continuously audits and assesses the configuration of AWS resources to ensure they comply with security best practices and governance policies. It tracks configuration changes in resources such as EC2 instances, security groups, IAM roles, and S3 buckets, maintaining a historical record of configurations.

Organizations use AWS Config to:

- Assess compliance with industry standards such as PCI-DSS, NIST, ISO 27001, and CIS benchmarks.

- Detect misconfigurations and remediate them using AWS Config Rules and AWS Systems Manager.

- Monitor resource relationships and dependencies for better visibility into infrastructure changes.

3. Which AWS Service Supports Governance, Compliance, and Risk Auditing of AWS Accounts?

AWS Audit Manager is a fully managed service that simplifies compliance assessments and risk auditing by automating the collection of evidence across AWS resources.

The key capabilities of AWS Audit Manager include:

- Automated compliance reporting for frameworks such as SOC 2, ISO 27001, PCI-DSS, GDPR, and HIPAA.

- Continuous evidence collection to track user activity, resource changes, and security configurations.

- Customizable assessment frameworks to align with internal governance policies.

Organizations use Audit Manager to simplify compliance processes, reduce manual effort in audits, and ensure that security controls are continuously monitored and assessed.

4. What Are Governance, Risk, and Compliance in Cloud Computing?

Governance, Risk, and Compliance (GRC) in cloud computing refers to a structured framework that helps organizations:

- Governance: Define policies and enforce best practices to ensure secure and efficient use of cloud resources. This includes identity and access management, cost control, and operational policies.

- Risk Management: Identify, assess, and mitigate security risks, misconfigurations, and potential breaches by implementing proactive security controls.

- Compliance: Ensure that cloud infrastructure adheres to regulatory requirements such as GDPR, HIPAA, SOC 2, and ISO 27001, and that cloud workloads meet industry standards.

AWS provides several GRC tools, including AWS Organizations, AWS Control Tower, AWS Security Hub, AWS CloudTrail, and AWS Audit Manager, to help enterprises implement a robust GRC strategy.

5. What is the Cloud Governance Structure in Cloud Service Management?

Cloud governance structure refers to the policies, roles, responsibilities, and best practices that define how cloud environments are managed and secured. It ensures that an organization maintains control over cloud resources while staying compliant with industry regulations.

A strong cloud governance structure includes:

- Identity and Access Management (IAM): Defining roles, permissions, and least-privilege access policies.

- Security Policies and Compliance Frameworks: Enforcing standards like CIS, NIST, PCI-DSS, and automated compliance monitoring.

- Resource Management and Cost Control: Implementing budget controls, cost allocation tags, and reserved capacity planning.

- Continuous Monitoring and Auditing: Using services like AWS Security Hub, AWS Config, CloudTrail, and GuardDuty to detect anomalies and maintain security posture.

- Discoverability: Developers often struggle to find existing software or services, identify their owners, and access relevant documentation. This leads to duplicated efforts and wasted time, especially in large engineering teams working across multiple applications. IDPs solve this by providing a centralized service catalog where backend, frontend, and platform engineering teams can access all infrastructure components, environments, and tools in one place.

- Self-Service: Without an IDP, developers often need to wait for DevOps teams to manually provision resources, leading to bottlenecks and delays in development cycles. IDPs introduce self-service capabilities by providing pre-configured infrastructure templates and automated workflows. For instance, instead of submitting a request for a Kubernetes cluster and waiting for DevOps to set it up, a developer can select a pre-approved cluster template and deploy it instantly through a self-service portal. This not only accelerates development but also ensures that infrastructure follows security and compliance policies without requiring manual intervention.

- Simplified Developer Experience: Managing deployments, tracking logs, and configuring services often requires developers to switch between multiple tools, leading to inefficiencies and context switching. IDPs centralize these functions into a single interface where developers can monitor deployments, manage configurations, and troubleshoot issues without needing to access multiple cloud dashboards.

What is an Internal Developer Platform?

Now that we know managing infrastructure manually slows down software development and adds extra work for DevOps teams, the next step is understanding how Internal Developer Platforms solve this problem. Instead of developers spending time provisioning compute instances, setting up networking, or fixing failed deployments, an IDP automates these tasks and provides a structured way to deploy applications.Key Features of an IDP

Now, an IDP simplifies infrastructure management by automating provisioning, enforcing security policies, and integrating with DevOps workflows. Unlike a CI/CD pipeline that focuses only on code deployment, an IDP handles infrastructure setup, access control, and observability, making sure it is a simple development and operations process.Infrastructure as Code for Automation

Manages infrastructure through code-based templates, eliminating manual setup and ensuring consistency.- IDPs integrate with Terraform, OpenTofu, Ansible, Helm, and eventually Pulumi to automate resource provisioning. With Kubernetes as a core component, test environments can be deployed using Helm, streamlining application deployments in a structured and repeatable manner.

- Infrastructure is defined as code, ensuring consistency across deployments.

- Developers select pre-configured templates instead of writing infrastructure code from scratch.

- Version control ensures that changes can be tracked, rolled back, and audited.

CI/CD Pipelines for Deployment

Automates application builds, testing, and deployments, reducing manual work and errors.- IDPs integrate with CI/CD tools through APIs, webhooks, and plugins. They trigger pipeline executions, enforce policies, and manage infrastructure provisioning automatically.

- Code changes trigger automated build, test, and deployment pipelines.

- Developers don’t need to configure CI/CD workflows - they use pre-built templates that ensure best practices.

Role-Based Access Control for Security

Controls who can modify infrastructure and deploy applications, enforcing strict access policies.- Only authorized users can modify infrastructure or trigger deployments.

- Permissions can be set at different levels - project, service, or environment-based access.

- Security policies ensure compliance with organizational standards and industry regulations.

Why Are More Organizations Using IDPs?

Managing your cloud infrastructure isn’t as simple as spinning up an EC2 instance or deploying an application. As organizations scale, their infrastructure and environment management become more complex - multiple cloud providers, containerized applications, strict security policies, and interconnected services. Developers need fast access to resources, but setting up environments manually slows them down and increases the chances of misconfigurations. Internal Developer Platforms solve these challenges by automating infrastructure tasks and enforcing standardized workflows across the development ecosystem. Here’s why more organizations are adopting IDPs:Faster Development Cycles

Provisioning infrastructure manually can take hours or even days, depending on approval workflows and configuration steps. IDPs speed up the process by giving developers access to pre-approved infrastructure setups.- Developers can provision virtual machines, databases, and networking resources instantly instead of waiting for DevOps approvals.

- Automated CI/CD pipelines ensure applications are tested and deployed consistently.

- Pre-configured templates remove inconsistencies, making sure every environment is deployed the same way.

Lower Operational Costs

Manually handling infrastructure requests puts a heavy load on DevOps teams, increasing operational costs. IDPs automate infrastructure provisioning, allowing developers to self-serve resources without relying on any manual intervention.- Developers can deploy applications without waiting for DevOps to set up environments, reducing downtime.

- IDPs offer cost estimation tools, helping teams see expected cloud expenses before deploying resources.

- By automating infrastructure management, companies reduce the need for large DevOps teams, optimizing operational costs.

Real-World Impact of IDPs

Organizations that integrate IDPs see measurable improvements in efficiency, security and compliance standards, and cost savings.- 85% of organizations agree that investing in IDPs and improving Developer Experience (DevEx) directly contributes to revenue growth.

- 77% of companies report a significant reduction in time-to-market due to centralized tooling, standardized workflows, and automated deployments.

- IDPs help reduce redundant infrastructure tasks, allowing engineering teams to focus on high-value development instead of manual provisioning and troubleshooting.

How Different IDPs Work

There are several internal developer platform examples in the industry, each designed to simplify your infrastructure management and application deployment. Not all Internal Developer Platforms work the same way. While they share the same goal - automating infrastructure provisioning, enforcing security policies, and simplifying deployments, their approaches vary. Some IDPs focus on stack-based deployments, where platform teams define reusable infrastructure components, while others offer UI-driven infrastructure automation, providing a visual interface for developers to configure resources without writing Terraform. Other platforms take an API-first approach, integrating directly into existing DevOps workflows.Stack-based Deployments

One approach to IDPs is stack-based deployments, where infrastructure configurations are standardized and reused across multiple teams. Cycloid follows this model by allowing platform teams to define stacks for cloud services such as AWS EC2, RDS, and S3. Developers can then deploy these pre-configured stacks without worrying about networking, security groups, or storage configurations. To provide a visual representation of how Cycloid enables stack-based infrastructure management, the diagram below shows how predefined infrastructure stacks, reusable templates, and automated deployment workflows streamline infrastructure provisioning while ensuring governance, cost estimation, and policy enforcement.- Pre-defined IaC Modules – Platform teams create reusable infrastructure modules using Terraform, OpenTofu, Ansible, Helm, or Pulumi, defining compute, networking, and storage configurations.

- Service Catalog & Parameterized Deployment – Developers select a predefined stack (e.g., AWS EC2 + RDS, Kubernetes cluster) from the IDP catalog, customizing parameters like instance type, VPC, and IAM roles.

- Automated Provisioning via Workflow Orchestration – The IDP invokes Terraform or Pulumi execution through an automation engine (e.g., ArgoCD, Jenkins, or a custom workflow runner), applying infrastructure changes without manual intervention.

- Built-in Policy & Security Enforcement – The IDP integrates with IAM, OPA, or custom rule engines to validate configurations, enforce RBAC, restrict unapproved changes, and ensure compliance with security standards before provisioning.

UI-driven Infrastructure Automation

Another approach is UI-driven infrastructure automation, which simplifies the provisioning process by allowing developers to select infrastructure components through an interactive interface. Instead of writing Terraform scripts or navigating complex Kubernetes configurations, developers can deploy resources with a few clicks. This model integrates with API-based automation, self-service interfaces, and cloud provisioning tools:- Visual Control Plane – Cycloid provides a web interface where users can configure cloud resources through an interactive UI instead of manually writing Infrastructure as Code configurations.

- Service Catalog & API Calls – Behind the UI, pre-configured infrastructure templates (Terraform modules, Kubernetes manifests) are stored in a service catalog.

- On-Demand Resource Provisioning – When a developer submits a request, the IDP makes API calls to cloud providers (AWS, GCP, Azure) or internal automation layers (Terraform Cloud, ArgoCD) to create infrastructure.

- Self-Service with Governance – Role-based access control makes sure that only authorized developers can provision resources, and approval workflows can be enforced if needed.

- Security & Governance Gaps – Backstage lacks built-in IAM, RBAC, and credential management, requiring custom authentication and policy enforcement integrations (e.g., OPA, Rego, or Terraform Sentinel)by default.

- Lack of CI/CD Tracking & Observability – It has no native pipeline tracking, requiring additional plugins to fetch logs from GitHub Actions, GitLab CI, or ArgoCD to monitor deployments.

- No Cost Estimation & FinOps Support – Cloud cost tracking isn't built-in, meaning teams must integrate AWS Cost Explorer, GCP Billing API, or Azure Cost Management separately.

- Manual Infrastructure Provisioning – No built-in Terraform or Kubernetes automation, requiring additional configurations and workflow automation.

Deploying an Application Using Cycloid

Cycloid easily eliminates these challenges by offering an all-in-one platform that includes stack-based infrastructure automation, security policies, observability, and cost tracking out-of-the-box. Instead of stitching together different tools, Cycloid provides a simple interface for deploying and managing your cloud infrastructure.Conclusion

So far, we’ve seen how an IDP internal developer platform removes the complexity of delivering software and managing infrastructure. We explored different IDP models and how Cycloid’s stack-based approach allows developers to deploy applications without handling Terraform or cloud configurations manually. With an IDP in place, teams can ship code faster while keeping infrastructure secure and consistent.Frequently Asked Questions

1. What is the Difference Between a Developer Platform and a Developer Portal?

A developer platform provides tools, automation, and infrastructure for building, deploying, and managing applications, while a developer portal is a centralized interface where developers can access tools, documentation, APIs, and internal services.2. What is the Difference Between an Internal Developer Platform and a Devops Platform?

An Internal Developer Platform (IDP) automates infrastructure provisioning and abstracts complexity for developers, while a DevOps platform focuses on CI/CD, collaboration, and automating the software development lifecycle.3. What is an IDP Platform Used for?

An IDP simplifies infrastructure management by automating resource provisioning, enforcing security policies, and providing self-service deployment capabilities for developers.4. What is the Difference Between an Internal Developer Platform and a Service Catalog?

An IDP automates infrastructure and integrates CI/CD workflows, while a service catalog provides a collection of pre-approved services that developers can request and deploy....Imagine you’re a DevOps engineer managing microservices on AWS. How would you configure auto-scaling to handle high traffic without wasting any resources?

Your teammate is setting up an auto-scaling policy using Terraform. The goal is to automatically add more EC2 instances when CPU usage gets too high so the application can handle more API requests during peak traffic.

However, there is a mistake in the Terraform configuration; the CPU utilization threshold is set to 10% instead of a higher, more reasonable value, like 60-70%. Because of this, even small activities like health checks and background tasks trigger auto-scaling. As a result, the system keeps launching new EC2 instances unnecessarily, even when they are not needed.

By the end of the month, the organization is hit with an unexpected bill of $18,000. The mistake was small, but its impact was significant.

This kind of problem is common in organizations where multiple teams manage their own resources independently. The data team, led by a data platform engineer, is responsible for managing large-scale data processing using Amazon EMR and analytics workloads on Amazon Redshift. These engineers ensure that data infrastructure scales efficiently without incurring excessive costs.

The infrastructure team manages networking resources such as VPCs, subnets, and security groups, ensuring connectivity and security across all environments. The application team deploys microservices using Amazon ECS or EKS, running workloads on EC2 instances or Fargate. Meanwhile, the ML team might use Amazon SageMaker with GPU-powered instances for training models.

Without a centralized system to track and manage these resources, misconfigurations, such as over-provisioning, idle instances, or conflicting configurations, can easily go unnoticed. When different teams work separately without a shared system, unexpected cost spikes are more likely.

When cloud costs increase unexpectedly, it indicates inefficiencies in how resources are configured and managed within your organization. Resolving these issues requires more than just cost tracking. It needs an approach where resource provisioning, scaling, and cost management are closely aligned and built into the infrastructure setup.

Platform engineering philosophy is the practice of building and maintaining an internal developer platform that acts as a bridge between developers and the underlying cloud infrastructure. It provides a structured system where cost management, resource provisioning, and scaling are built into daily workflows. This makes sure that resources are used efficiently, scaling is handled correctly, and cloud expenses stay within the organization’s budget.

Platform Engineering vs DevOps

Before diving into the differences, let's first address the question: What is platform engineering? Platform engineering is the practice of building and maintaining an internal developer platform that acts as a bridge between developers and the underlying cloud infrastructure.

Now, scaling your cloud resources efficiently and keeping the cloud costs under control at the same time are some of the major challenges for many organizations. Mismanaged resources, misconfigurations, and the absence of centralized monitoring often lead to some unnecessary expenses. To address such issues, it is important to understand how platform engineering philosophy and DevOps differ in their approach.

Now, here is a comparison table of platform engineering philosophy and DevOps, focusing on their roles in resource management and cost optimization:

Platform engineering is all about building a shared system that simplifies how DevOps or Infrastructure teams manage their infrastructure and control cloud costs. Standardizing processes and enforcing clear rules helps reduce errors and makes sure that resources within your infrastructure are used effectively.

On the other hand, DevOps focuses more on teamwork and flexibility. It improves team collaboration and speeds up the deployments as well, but this flexibility can sometimes lead to inconsistent practices and higher cloud costs.

For organizations struggling with increasing cloud expenses and resource inefficiencies, combining platform engineering with DevOps can strike the right balance. It brings structure to resource management while still allowing teams to work efficiently.

How Does Infrastructure Platform Engineering Philosophy Help in Cost Management?

Now as we know the differences between platform engineering and DevOps, it’s clear that platform engineering takes a more structured approach for managing your cloud infrastructure. This philosophy is particularly effective in addressing cloud cost challenges by integrating cost management directly into resource provisioning, scaling, and governance processes.

Standardizing Resource Provisioning

One of the main reasons for higher cloud costs is allocating resources without proper planning or provisioning instances that are larger than necessary. For example, using an EC2 instance like m5.4xlarge instead of m5.large increases your cloud expenses without matching the actual needs of the application. Similarly, not setting up lifecycle policies for S3 buckets can lead to unused data, such as old logs or temporary files, being stored for long periods, which can also add up to storage costs.

These modules define how resources should be configured, including instance sizes, network configurations, and tags. Since they are created by a central or platform team, they ensure that all considerations, such as performance, cost efficiency, and security are accounted for before provisioning. For example, an EC2 module can guide teams to select an instance size that fits both the application’s needs and the organization’s budget at the same time. It can also include tagging rules to track spending and identify resources that are no longer needed within your infrastructure. By using these modules, teams can avoid over-provisioning and configure their resources correctly.

Automating Scaling

Next, let’s talk about scaling, one of the trickiest parts of managing cloud infrastructure. We’ve all been there; when setting up a new EC2 instance or Kubernetes cluster, there’s always some guesswork involved. To be safe, we often over-provision and end up paying for idle resources, or we under-provision and run into performance issues. When scaling isn’t properly configured, extra instances may be added during high traffic but don’t scale down when demand drops, leading to unnecessary costs.

Platform engineering helps by automating scaling based on real-time usage. Instead of relying on fixed thresholds or manual adjustments, scaling policies automatically adjust resources based on actual workload demands. For example, EC2 instances can be set to scale up when CPU usage goes above 70% and scale down when it drops below 30%. Kubernetes clusters can scale pods based on memory usage or request load, ensuring resources are always in line with demand.

By integrating these automated scaling policies, resources are provisioned only when needed and removed as soon as traffic decreases. This enables self-service capabilities, allowing development teams to deploy applications without worrying about over-provisioning or unexpected costs. Instead of manually adjusting scaling settings, teams can rely on predefined rules set by the platform engineering team, ensuring resources are used efficiently.

Enforcing Policies

Now, let’s look at policy enforcement, which plays an important role in controlling cloud costs. When teams create resources without following standard rules, tracking expenses and optimizing usage becomes much harder. For example, if EC2 instances and S3 buckets are not tagged properly, it becomes difficult to determine which project, cost center, or team owns them. As a result, unused instances may keep running unnoticed, adding to cloud costs without anyone being accountable for them.

Platform engineering philosophy addresses this by enforcing governance policies that define how resources should be created, monitored, and destroyed. These policies require teams to follow some specific rules, such as applying cost allocation tags, setting spending limits, and restricting certain instance types. For example, an organization might enforce a rule where every EC2 instance must have tags like Project: BillingSystem, Owner: DevOpsTeam, and Environment: Production to improve cost monitoring. Another policy could prevent the provisioning of high-cost instances like r5.8xlarge unless explicitly approved.

By integrating these policies into the platform, organizations establish pre-defined rules that everyone in the team must follow. This makes sure that cost control is part of daily operations, prevents unnecessary spending, simplifies audits, and keeps cloud costs within the organization's budget.

Organizations use various platform engineering tools to enforce policies and manage cloud resources efficiently. Tools like Backstage, Crossplane, and Firefly help standardize provisioning, enforce cost controls, and provide self-service capabilities for developers.

Setting Up Monitoring for Cloud Costs (Hands-On)

Now that we have seen how platform engineering philosophy helps control your cloud costs through provisioning, scaling, and policy enforcement, the next step in the list is monitoring your cloud costs. Without proper cost monitoring, unexpected expenses can build up and go unnoticed until they appear in your billing report.

To prevent this, we will set up an automated cost monitoring system using Terraform, AWS Budgets, and AWS Cost Explorer. This setup will help you track your cloud spending, send alerts when costs exceed a defined threshold, and provide insights into which resources contribute the most to the overall bill.

To track costs, we first need to create some AWS resources. We will provision an EC2 instance and an S3 bucket using Terraform. The EC2 instance will be a t2.micro running in us-east-1, and the S3 bucket will be private. These resources will be tagged with relevant metadata such as Name, Environment, and Owner to help track expenses in AWS Cost Explorer. The following Terraform configuration provisions these resources.

provider "aws" {

region = "us-east-1"

}

resource "aws_instance" "monitoring_ec2" {

ami = "ami-0e86e20dae9224db8"

instance_type = "t2.micro"

subnet_id = "subnet-02efa144df0a77c13"

tags = {

Name = "monitoring-ec2"

Environment = "dev"

Owner = "team-a"

}

}

resource "aws_s3_bucket" "monitoring_bucket" {

bucket = "cost-monitoring-bucket-cycloid-93829"

acl = "private"

tags = {

Name = "monitoring-s3-bucket"

Environment = "dev"

Owner = "team-a"

}

}

Once the Terraform configuration is ready, we need to initialize Terraform to download the necessary provider plugins. Running the terraform init command will set up the working directory for Terraform.

At this point, Terraform will initialize the AWS provider, ensuring that all required dependencies are installed.

After initializing Terraform, we should verify what changes will be applied by running the terraform plan command. This helps confirm that Terraform will create the correct resources before actually deploying them.

Once the plan is reviewed, we can proceed with applying the configuration. Running the terraform apply command will create the EC2 instance and S3 bucket as defined in the Terraform configuration.

Now that the EC2 instance and S3 bucket are deployed, the next step is to create a budget to track cloud costs. Here, we will define a monthly budget of $1 and enable cost tracking. This will allow us to detect any unexpected costs early and take action before they increase further. The following Terraform configuration sets up the AWS Budgets resource.

resource "aws_budgets_budget" "monthly_cost_budget" {

name = "monthly-cost-budget"

budget_type = "COST"

limit_amount = "1"

limit_unit = "USD"

time_unit = "MONTHLY"

cost_types {

include_credit = false

include_discount = true

include_other_subscription = true

include_recurring = true

include_tax = true

}

}

Before applying this configuration, we verify it by running the terraform plan command.

Once verified, apply the changes with the terraform apply command to create the budget in AWS. Terraform will now deploy the budget, making it visible in the AWS Budgets console.

Now, having a budget in place is useful, but we also need a way to get notified when spending reaches a certain threshold. Instead of checking the budget through the AWS console, we can set up an email alert that will notify us when expenses exceed 80% of the budget. This makes sure that any unexpected charges are detected early. The following Terraform configuration creates an AWS Budget Action that sends an email alert when spending goes above 80% of the budget.

resource "aws_budgets_budget_action" "email_alert" {

budget_name = aws_budgets_budget.monthly_cost_budget.name

action_type = "NOTIFICATION"

notification {

notification_type = "ACTUAL"

comparison_operator = "GREATER_THAN"

threshold = 80.0

threshold_type = "PERCENTAGE"

subscriber {

subscription_type = "EMAIL"

address = "saksham@infrasity.com"

}

}

}

Run the terraform apply command to apply the changes and activate the budget alert.

Once the budget is created, AWS will start tracking costs, and if the spending exceeds 80% of the defined limit, an email notification will be sent to the configured address. Below is an example of an AWS Budgets alert showing that the actual cost has exceeded the set threshold.

With AWS Budgets and alerts in place, cost tracking becomes automated, making sure that responsible teams are notified when spending exceeds the set limit. Without this setup, unexpected charges could easily go unnoticed until the billing cycle ends.

Best Practices for Sustainable Cloud Cost Management

Now that we have a system in place to monitor our cloud costs, the next step is making sure that cloud costs remain optimized over time as well. Just having budgets and alerts is not enough; organizations need to follow some best practices to prevent unnecessary spending and improve long-term cost efficiency.

Conduct Regular Cost Audits

Cloud environments scale over time as teams provision new resources. Without regular audits, unused or oversized instances, idle storage, and misconfigured services can lead to unnecessary cloud costs. Reviewing cloud spending every month or week helps identify such resources. For example, an audit might reveal EC2 instances running 24/7 when they are only needed during business hours. Switching such instances to a scheduled start/stop policy or using spot instances can significantly reduce expenses.

Enforce Strict Resource Tagging Policies

When multiple teams work in the same cloud environment, tracking which resources belong to which project becomes difficult. Without proper resource tagging, identifying and decommissioning unused resources becomes difficult. Enforcing a tagging policy that includes attributes like Project, Environment, and Owner ensures clear cost allocation. Using Terraform modules that require tags at the time of provisioning helps enforce this consistently across the organization.

Implement Cost Alerts and Budgets

Setting up cost alerts prevents unexpected charges by notifying teams when spending exceeds predefined limits. AWS Budgets, as configured earlier, help track total cloud costs, but organizations can extend this to monitor specific services like RDS, Lambda, and EBS snapshots. Alerts can also detect sudden spikes in usage, helping teams investigate and address cost irregularities before they become a significant issue.

By following these best practices, organizations can maintain better control over their cloud expenses. Conducting regular audits, enforcing tagging policies, and setting up cost alerts ensure cloud costs remain predictable and aligned with business needs. When combined with platform engineering principles, these measures create a sustainable approach to managing cloud costs at scale.

Now, in the earlier hands-on setup, we created an AWS budget to track cloud costs, but there were some limitations to it as well. The budget only starts tracking after the resources are deployed, and alerts are triggered only after the threshold is exceeded. This means there is no way to see the cost impact before provisioning a resource. If a large instance type like m5.4xlarge is selected instead of a smaller one, there is no immediate breakdown to show how much the cost will increase. Without visibility into expected costs upfront, most of the teams risk deploying oversized resources and only realizing the impact when the budget alert is triggered.

For more ways to reduce cloud costs, check out this blog on Cloud Cost Optimization.

Using Cycloid to Estimate and Manage Cloud Costs

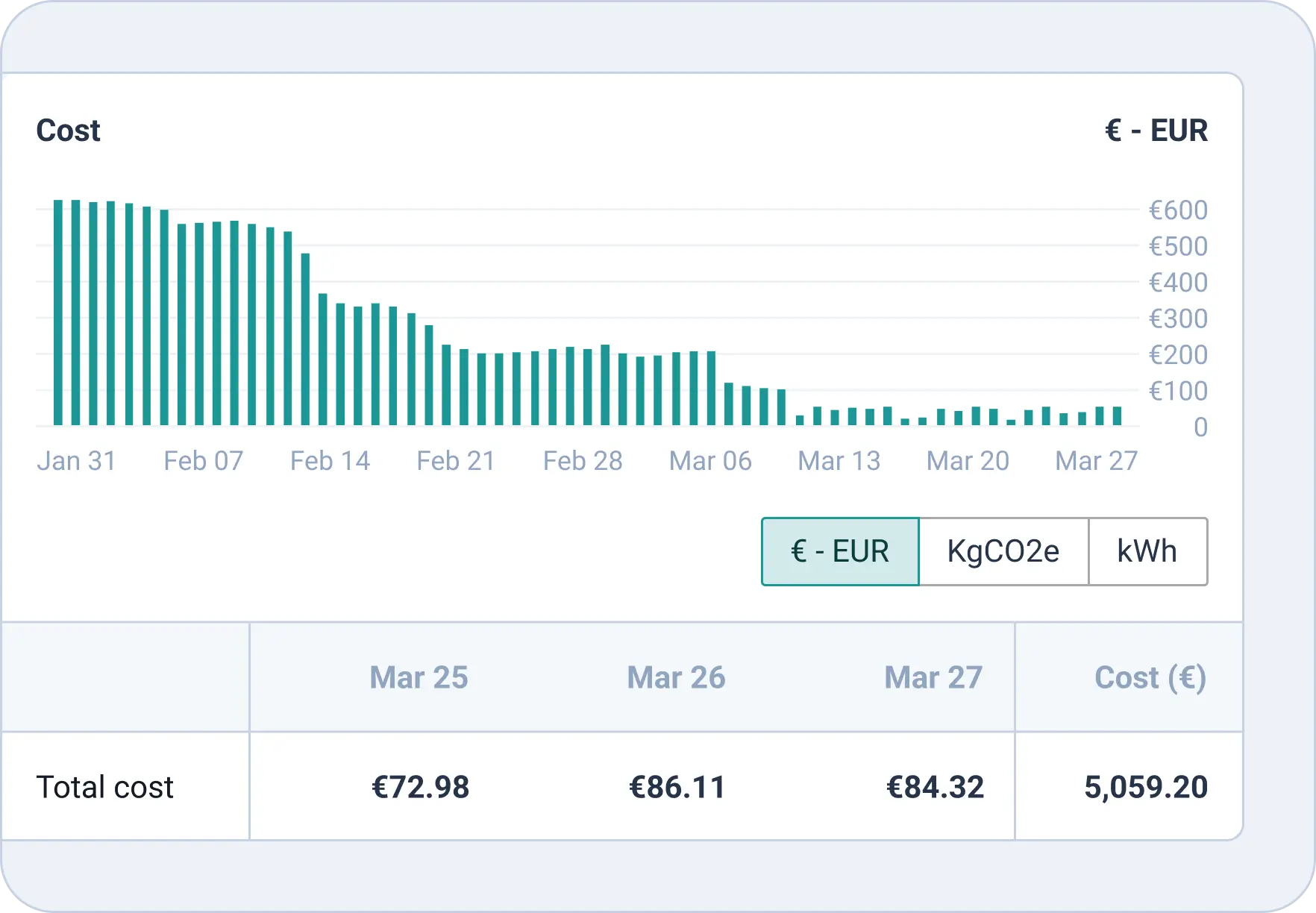

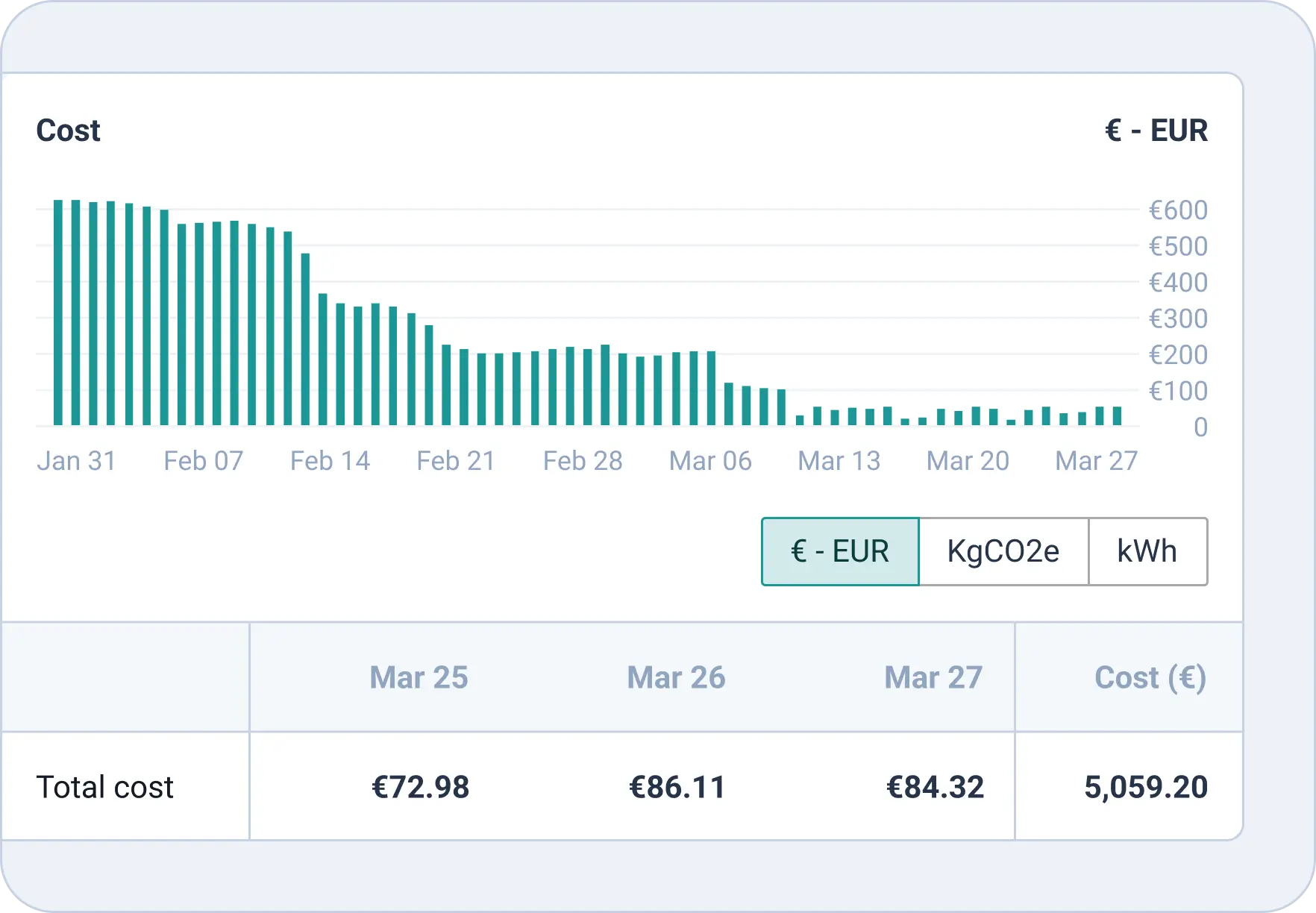

This is where Cycloid simplifies cloud cost management by integrating cost estimation directly into the resource provisioning workflow. Instead of manually setting budgets, navigating Cost Explorer, and provisioning resources through Terraform, Cycloid provides everything in one place.

Before Cycloid, teams had to set up cost controls manually, define budgets, configure alerts, and provision resources from scratch with a lot of guesswork. It was easy to over-provision, miss cost spikes, or struggle with fragmented tracking across multiple tools. What if there was a centralized platform that handled all of this automatically? Cycloid simplifies cloud cost management by integrating cost estimation directly into the resource provisioning workflow. Instead of manually setting budgets, navigating Cost Explorer, and provisioning resources through Terraform, Cycloid provides everything in one place, ensuring that cost visibility and control are built into the process from the start.

Cycloid centralizes infrastructure management, allowing teams to organize deployments under structured projects and environments.

Once inside a project, Cycloid allows users to define cloud configurations without writing Terraform configurations. Teams can specify the AWS account, region, VPC ID, SSH key, and instance type through a UI-driven approach, ensuring consistency across deployments.

What sets Cycloid apart is its side-by-side cost estimation during resource creation. Unlike AWS Budgets, which tracks expenses after deployment, Cycloid provides real-time cost visibility before provisioning. This helps teams adjust configurations early to prevent unnecessary expenses.

The platform team defines infrastructure stacks in Cycloid, ensuring consistency across environments. These configurations are stored in Cycloid’s GitHub private repository and can be deployed using predefined modules via the UI.

Cycloid’s stack pipeline runs Terraform with built-in governance, making sure that infrastructure deployments follow predefined policies. This means that teams don’t have to manually enforce security rules, cost limits, or instance configurations; everything is checked automatically before provisioning. The pipeline applies standard practices for instance sizing, network configurations, and tagging, reducing misconfigurations and ensuring compliance across all the environments.

Developers can use Cycloid’s pipeline-driven workflow to deploy infrastructure directly without writing Terraform configurations. The UI provides cost estimates before deployment, and teams can track spending across projects in Cycloid’s dashboard.

Cycloid also offers additional features like cloud carbon footprint tracking and deeper cost visibility to help organizations maintain sustainable and optimized cloud spending.

Once the configurations are set, Cycloid automatically runs a pipeline to deploy the infrastructure. It pulls the Terraform stack, executes a terraform plan step to generate an execution plan, and then applies the changes to provision resources.

The pipeline ensures deployments follow a structured process without requiring manual intervention. It also manages Terraform state to keep track of existing resources and prevent conflicts. This automated workflow makes infrastructure provisioning more predictable while keeping costs in check as well.

With real-time cost estimation, teams can compare instance types or adjust configurations before deployment to balance cost and performance. Once the resource configuration is finalized, it can be deployed directly from Cycloid, making sure that infrastructure changes are cost-optimized before affecting the cloud environment.

By integrating cost estimation with provisioning and ongoing cost tracking, Cycloid makes sure that cloud cost management is proactive rather than reactive. Instead of relying on post-deployment budgets and alerts, teams can optimize cloud spending at the time of provisioning, ensuring predictable and well-managed cloud costs.

Do you want to take control of your cloud costs before deploying to the infrastructure? Try Cycloid now and make every resource count.

Conclusion

Till now, you should have a clear understanding of how misconfigurations and unmanaged resources lead to high cloud costs. We covered how platform engineering, cost monitoring, and Cycloid help control expenses. By following these practices, teams can optimize cloud spending and avoid unexpected charges.

Frequently Asked Questions

-

How do you control cloud costs?

Cloud costs can be controlled by right-sizing resources, setting budgets and alerts, automating scaling, and regularly reviewing usage to eliminate waste. Using Infrastructure as Code (IaC) helps standardize provisioning and prevent over-allocation.

- How can you optimize costs with cloud computing?

Optimizing cloud costs involves using auto-scaling, spot instances, serverless computing, and reserved instances where applicable. Regular audits, enforcing tagging policies, and tracking expenses with cost-monitoring tools also help. -

How to save cost on cloud?

To save cloud costs, avoid idle resources, use cost-effective storage options, and leverage discounts like reserved and spot instances. Implement automation to scale resources only when needed.

-

How to control cost in Azure?

Azure costs can be controlled by setting up Azure Cost Management, enabling budgets and alerts, using reserved instances, and optimizing virtual machines and storage based on usage patterns.

-

What is Azure Cost Management tool?

Azure Cost Management is a built-in tool that helps track, analyze, and optimize cloud spending in Azure. It provides reports, cost alerts, and recommendations to manage expenses effectively.

Si vous ne vivez pas sous un rocher depuis un an et demi, il y a de fortes chances que vous ayez entendu parler de l'ingénierie des plates-formes. l'ingénierie des plates-formes. La dernière tendance du secteur promet de faire tout ce que DevOps a essayé de faire et a échoué, une fois de plus : alléger la charge de travail de vos développeurs, améliorer DevX, faire monter en flèche l'efficacité opérationnelle et transformer vos projets en rivières d'or.

En réalité, l'ingénierie de plateforme poursuit le processus entamé par DevOps. Avec l'ingénierie de plateforme, les dirigeants disposent d'un plan d'action pour construire des chaînes d'outils et des flux de travail qui permettent aux développeurs de tout niveau de compétence de disposer de capacités en libre-service. Il s'agit d'une solution complète qui améliore non seulement l'autonomie et la collaboration des développeurs, mais aussi la gestion des ressources et l'intégration de l'écosystème et des modules d'extension.

Vous comprenez pourquoi il s'agit d'une proposition extrêmement attrayante pour les services informatiques - et d'une entreprise très intimidante. Après tout, il faut près de 3 ans et 20 spécialistes dévoués pour commencer à obtenir des résultats tangibles. Et pourtant, Gartner prévoit que d'ici 2026, 80 % des services informatiques adopteront des équipes internes d'ingénierie des plateformes.

Il est évident que vous ne voulez pas être à la traîne, mais cela signifie-t-il que vous devez vous lancer dans la course dès maintenant ? Quand devriez-vous adopter l'ingénierie de plateforme ?

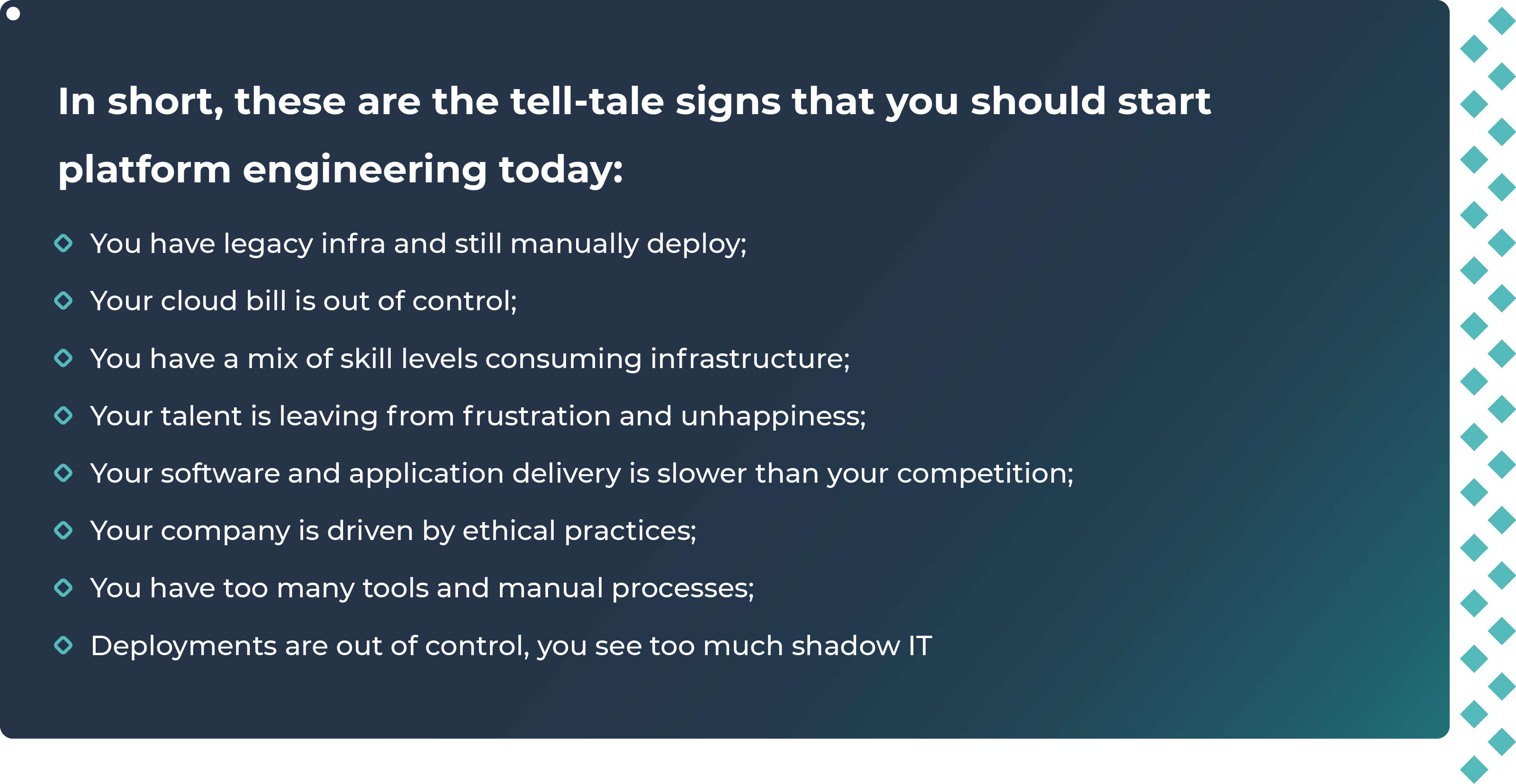

Examinons les facteurs qui font que votre organisation doit envisager sérieusement l'ingénierie de plateforme.{{cta('165511729744','justifycenter')}}

Quelle est l'urgence ?

2026 est plus proche que vous ne le pensez, et l'industrie se prépare à protéger ses opérations contre les problèmes d'efficacité, de durabilité et de talents. Trois raisons principales expliquent pourquoi les organisations tournées vers l'avenir s'orientent vers une plateforme d'ingénierie interne maintenant, et pas demain.

- Conserver les meilleurs talents

Les grands talents - DevOps, ingénieurs logiciels et de plateforme, architectes de solutions - veulent innover et résoudre les problèmes avec des technologies de pointe, au lieu de perdre du temps à dépanner les systèmes existants. L'ingénierie de plateforme rationalise les tâches complexes et résume les rouages des outils de développement en une belle interface utilisateur conviviale. Les entreprises qui adoptent des stratégies d'ingénierie de plateforme ont tendance à attirer et à conserver les meilleurs talents. Si vous tardez à le faire, vous risquez de les perdre au profit de la concurrence.

- Efficacité maximale

Une plateforme d'ingénierie s'occupe du travail ennuyeux que personne ne veut faire et minimise l'erreur humaine dans les tâches qui peuvent être automatisées. Cela vous permet de vous débarrasser d'une dette technique inutile et d'aller de l'avant de manière plus légère, moins lourde et plus rapide, en donnant à votre équipe brillante les moyens de faire son meilleur travail. Nous aimons considérer la fusion entre votre personnel et l'ingénierie de plateforme comme un moteur de distorsion, aidant votre organisation à aller audacieusement là où aucun homme n'est allé auparavant.

- Économiser les ressources

La planète ne peut pas attendre ! Les technologies de l'information jouent un rôle considérable dans notre impact global sur l'environnement - vous n'avez pas compris ? Les émissions de carbone liées à l'utilisation de l'informatique en nuage à l'échelle mondiale ont dépassé celles de l'aviation commerciale en 2023. Sans compter qu'il s'agit d'une ponction énorme sur les ressources propres de votre organisation. L'ingénierie de plateforme vous aide à garder un œil sur votre utilisation du cloud, en réduisant les ressources inutilisées et en évitant les dépassements de budget si nécessaire. Cela signifie une charge plus légère sur Terre et des économies de coûts en prime !

Quand devriez-vous adopter l'ingénierie de plateforme ?

Réponse courte : cela dépend. Réponse longue : cela dépend de l'ampleur de vos projets.

Lorsque votre organisation ou votre projet atteint un certain niveau de complexité, il est inévitable qu'une plateforme dédiée soit nécessaire pour prendre en charge vos applications, services ou produits. Voici quelques facteurs à prendre en compte pour déterminer s'il est temps d'investir dans l'ingénierie des plateformes :

- Défis d'échelle :

Vous éprouvez des difficultés à faire évoluer vos applications ou vos services pour répondre à une demande croissante ? C'est peut-être le signe que vous avez besoin d'une plateforme capable de fournir l'infrastructure et les outils nécessaires pour soutenir l'évolutivité. Une plateforme d'ingénierie peut vous aider à passer à la vitesse supérieure lorsque vous en avez besoin.

- Tâches répétitives :

Voici ce qu'aucun développeur ou DevOps ne veut faire : résoudre de manière répétée les 3 mêmes problèmes ou implémenter les 3 mêmes fonctionnalités à travers plusieurs projets. C'est un énorme gaspillage non seulement de la créativité de votre équipe, mais aussi des ressources de votre organisation. Une plateforme peut aider à standardiser ces processus et à améliorer l'efficacité, en éliminant les tâches répétitives et les erreurs humaines.

- Diverses charges de travail :