Your unified Internal Developer Portal & Platform

Sovereign by design, secure by nature, made to scale platform engineering initiatives.

The proof is in our clients and partners

Enable governance, efficiency, and golden paths for your teams

In software development and infrastructure management, you need to move fast and deploy while maintaining reliability, security, and control. Cycloid’s GitOps-first approach, backed by DevOps best practices, reduces bottlenecks by combining an Internal Developer Portal and Platform in one.

The best part? You’ll be up and running this quarter, not next year.

With Cycloid's customizable end-to-end solution, you'll see the potential of platform engineering for your organization in weeks, not years.

Start by managing your service catalog directly from your Git repository. Cycloid takes a full GitOps approach, with Git as the source of truth. Create IaC from your existing infrastructure, or create a stack from a predefined blueprint, then push this stack to your Git catalog repository.

Customize your user experience with our stack forms editor. Use drop-down menus to select a cloud account, with auto-complete widgets that fetch options from APIs. Drop-down options adapt based on previous selections. Conditionally display advanced fields to streamline user interactions with the Cycloid asset inventory widget.

Multi-tenancy capabilities let you share service catalogs with multiple organizations. You can also enforce multi-factor authentication to meet security rules, configure the Terraform backend, maintain brand consistency, and implement RBAC for governance.

It's easy for end-users to deploy infrastructure and apps. Select a stack from your service catalog and, optionally, your preferred cloud provider. Configure your deployment as needed, within the guardrails defined by your platform team. Use the Cycloid asset inventory widget to integrate cloud resources with each other, and estimate infrastructure costs before deployment to stay within budget. End-user configurations are securely saved back to your Git repository.

Here, Cycloid serves as both an internal developer portal and platform. Cycloid's asset inventory maintains visibility into deployed infrastructure resources, the FinOps module provides cost analysis and highlights opportunities for optimization, and Cycloid helps you monitor and reduce carbon emissions, reinforcing your sustainability goals.

Cycloid: platform engineering done right.

Secure better platform adoption

If you build an Internal Developer Portal, developers will come, unless what’s been built isn’t fit for their purpose. Cycloid’s different. Our approach lets platform teams automate and optimize hybrid and multi-cloud environments. End-users can work with cutting-edge DevOps and cloud automation, governed by best practices, without needing years of experience. Executives gain accelerated time-to-value, streamlined resource allocation, measurable outcomes, and an improved developer experience. See how Cycloid works with your teams, not against them.

Real impact, measured and rated

Let anyone interact with tools, cloud, and automation without being an expert. This means your people can focus on value-added tasks, faster.

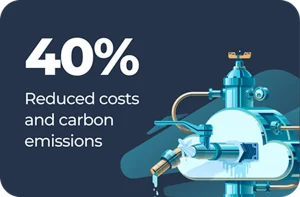

Cut cloud spending and carbon footprint with observability and governance tools. Don’t be wasteful – more than best practice, it’s business sense.

Cycloid named in Gartner® Hype Cycle 2025 for Platform Engineering and Site Reliability Engineering.

Scale your platform engineering initiatives

So, what are the key benefits?

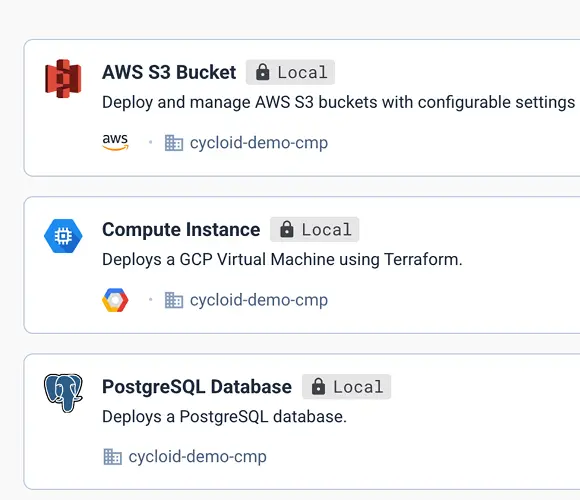

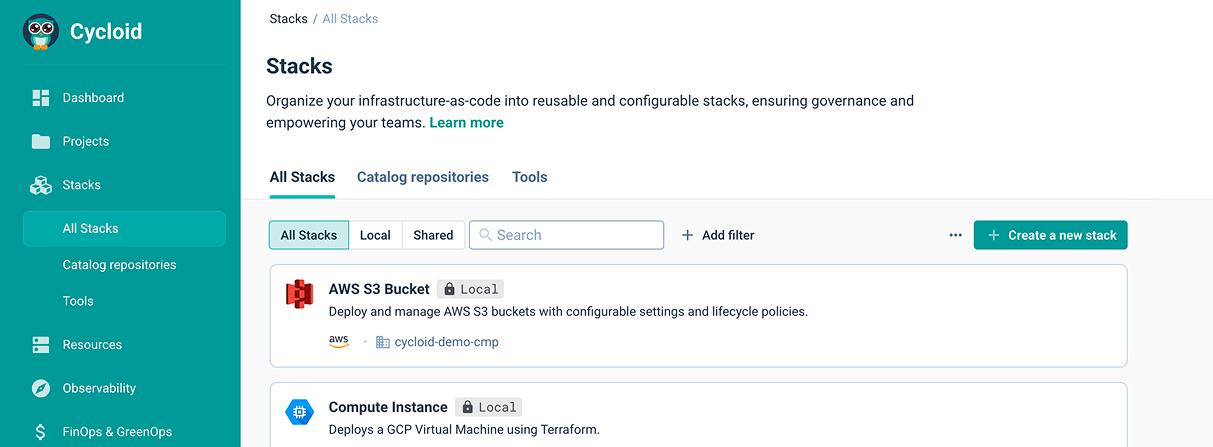

1. Build your Service Catalog

Landing zones, infrastructure, applications: display anything you want in your service catalog to give developers a self-service portal while keeping best practices, security, and automation in place.

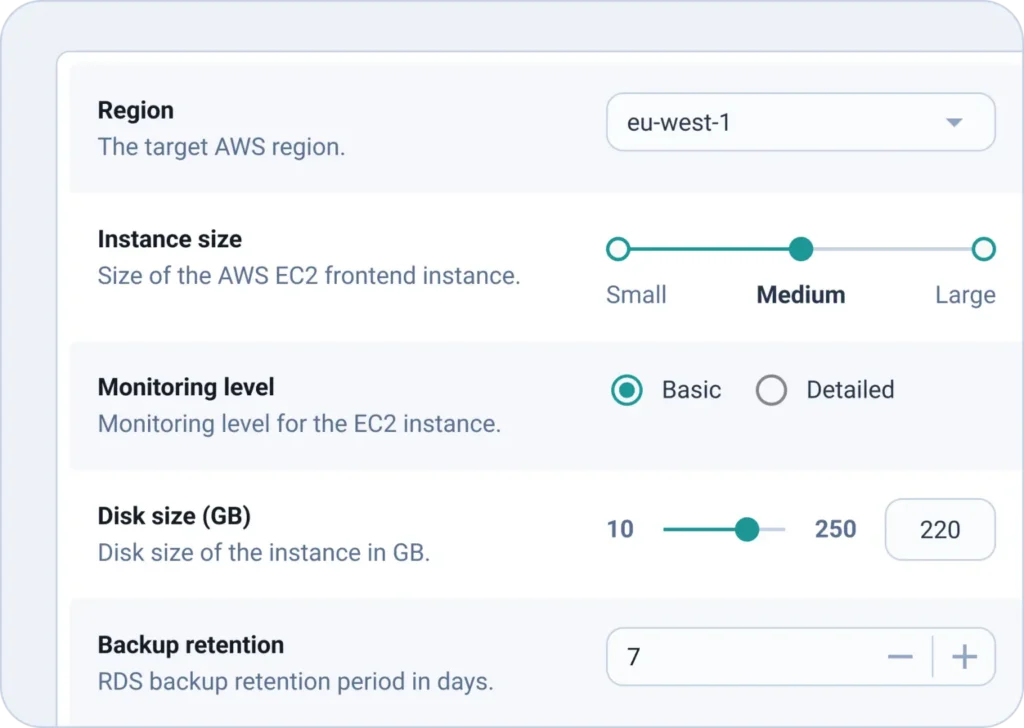

2. Secure your Self-Service Portal Adoption with Forms

Bring flexibility to your service catalog with forms designed by your platform teams. They allow anyone to interact with tools, cloud, and automation without having to become a DevOps expert.

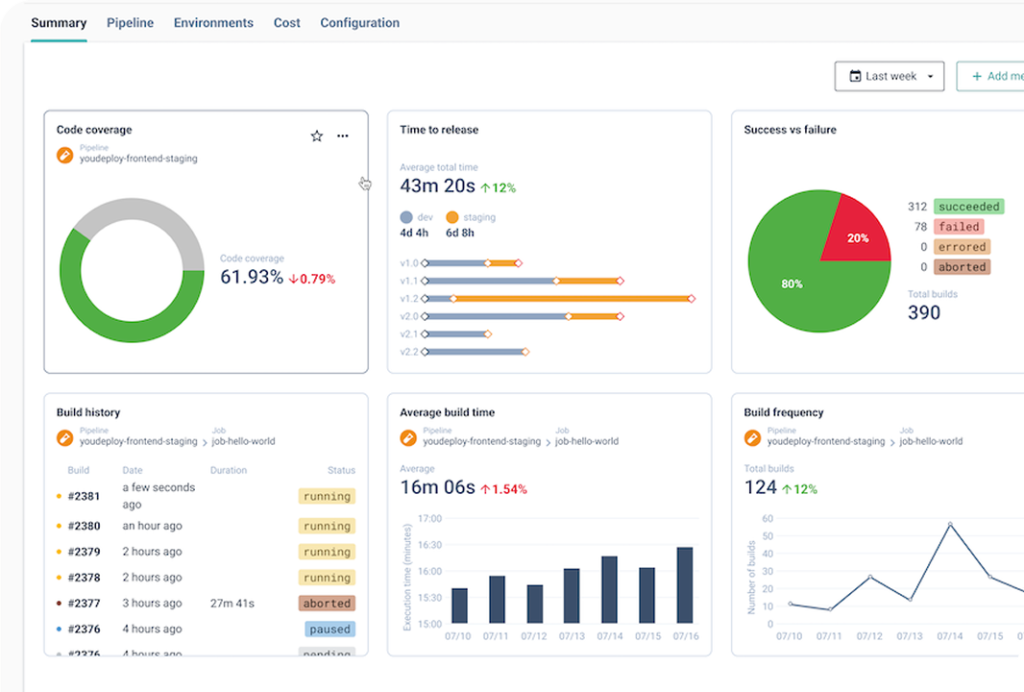

3. Centralize Governance and Observability

Gain centralized visibility into your ecosystem to foster best practices: RBAC, approvals, security, MFA, SSO, infrastructure diagrams, dashboards, asset inventory, KPIs, logs, documentation, plugins, and more.

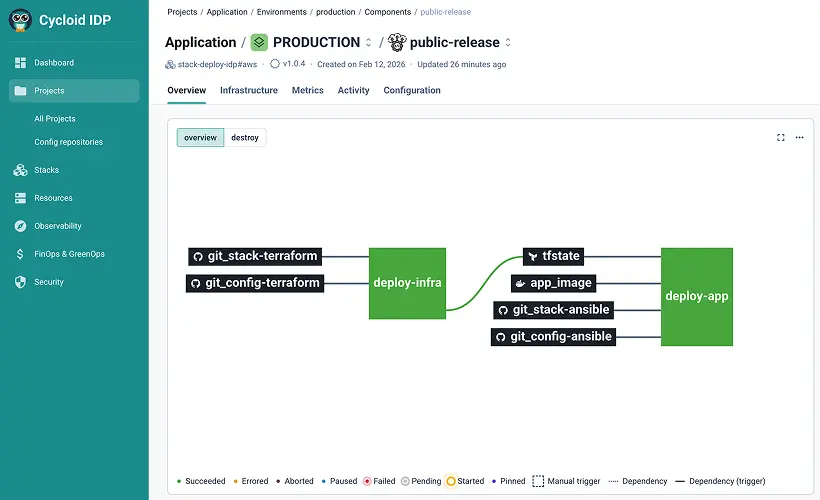

4. Build your Platform Orchestration

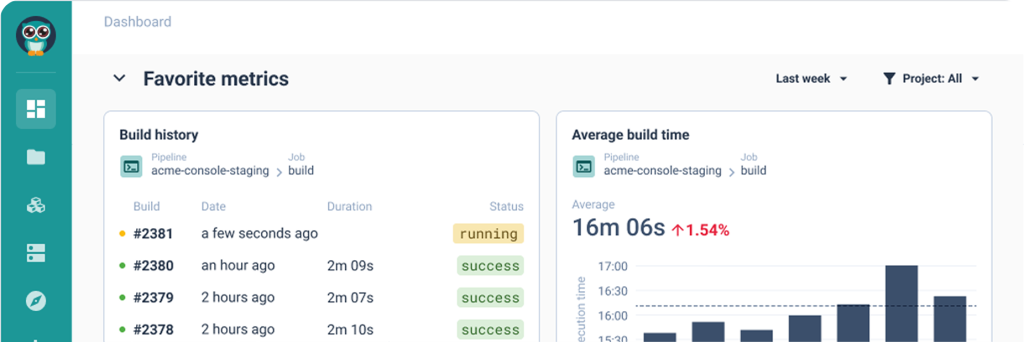

Create custom workflows to simplify deployment processes for end-users. This platform orchestration integrates seamlessly with your tools and APIs and connects to your continuous integration tool.

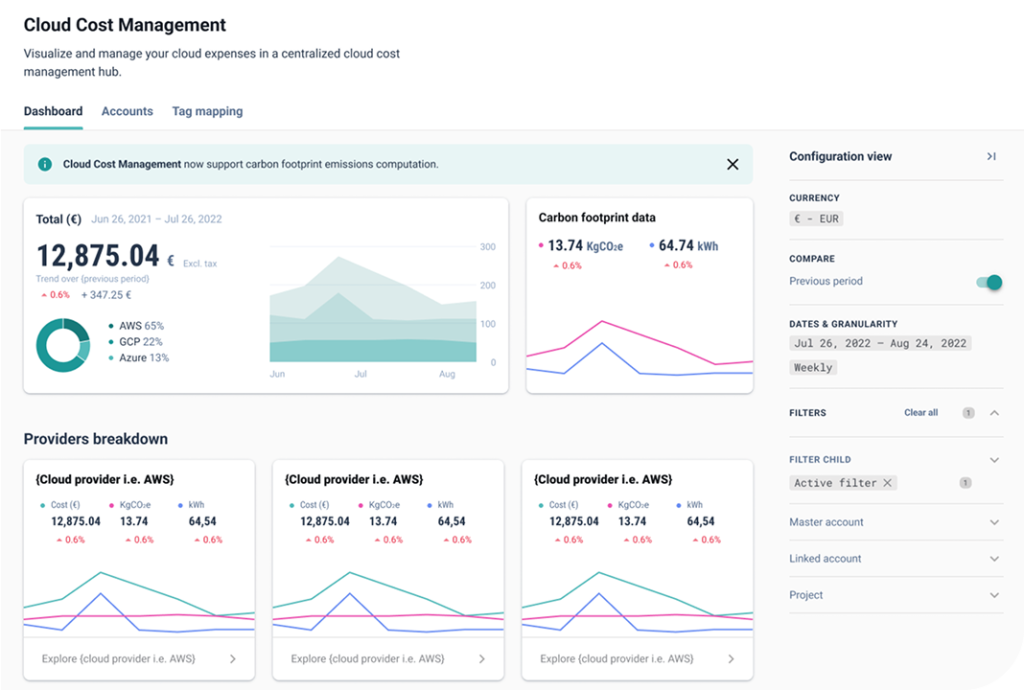

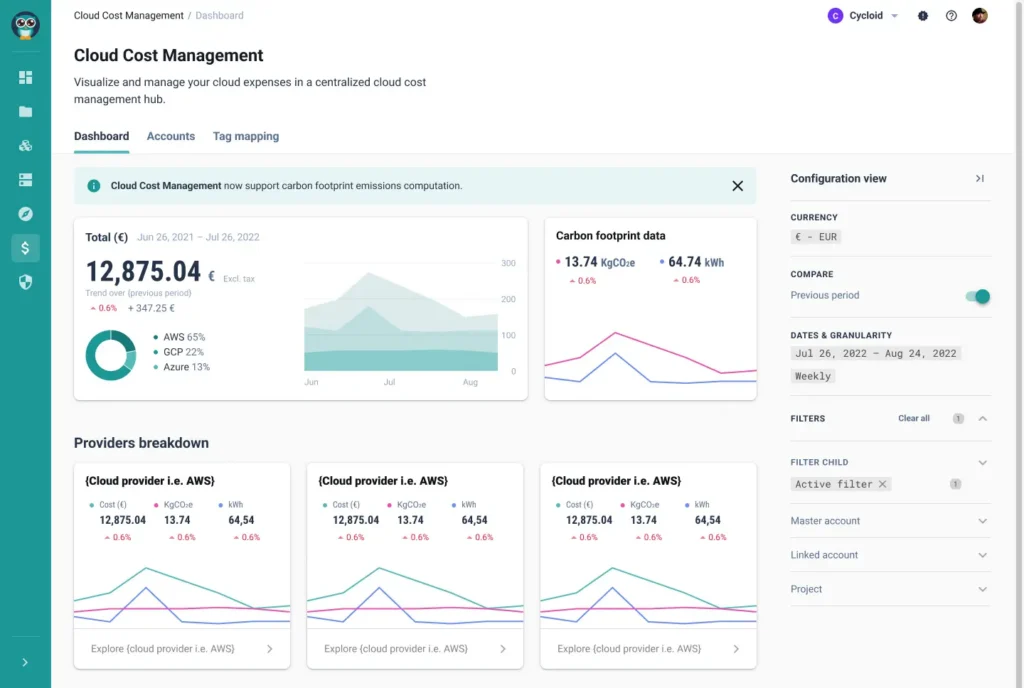

5. Drive FinOps & GreenOps Practices

From cost estimation before deployment, to price and carbon footprint visualization in the asset inventory, to cloud cost and carbon footprint management across multi-cloud environments, get 80% of the features you need. If you want more, just plug in your premium FinOps software or develop your own custom plugins.

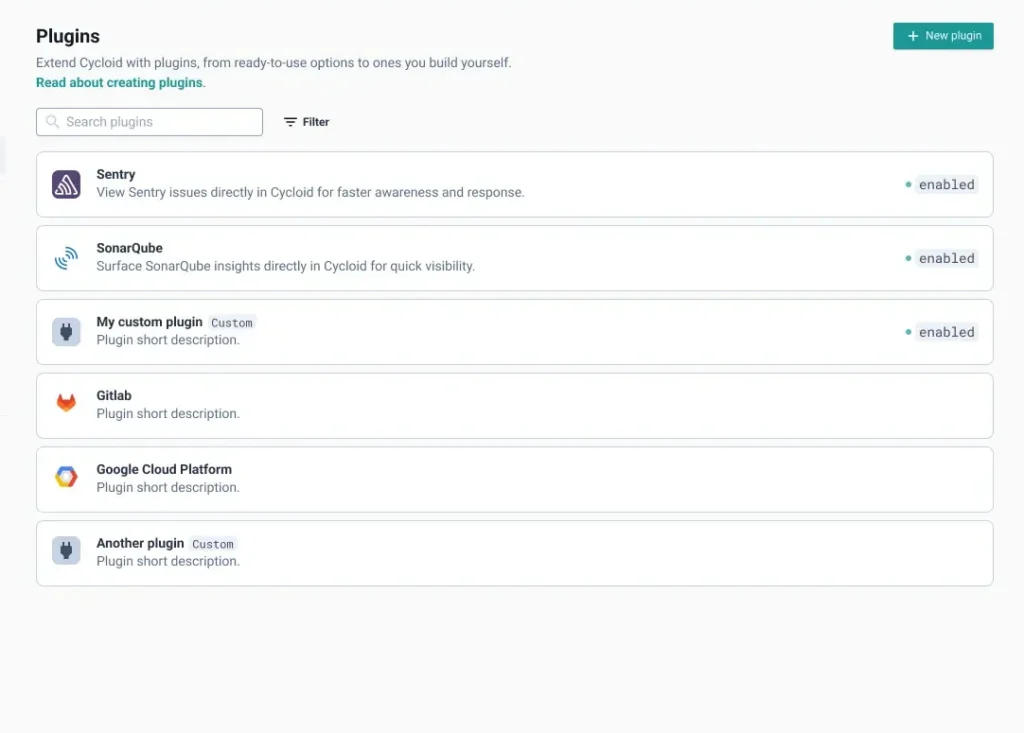

6. Customize your Portal with Plug-ins

Transform Cycloid into a centralized developer platform and portal hub with official or custom plugins, enriched with external tool data.

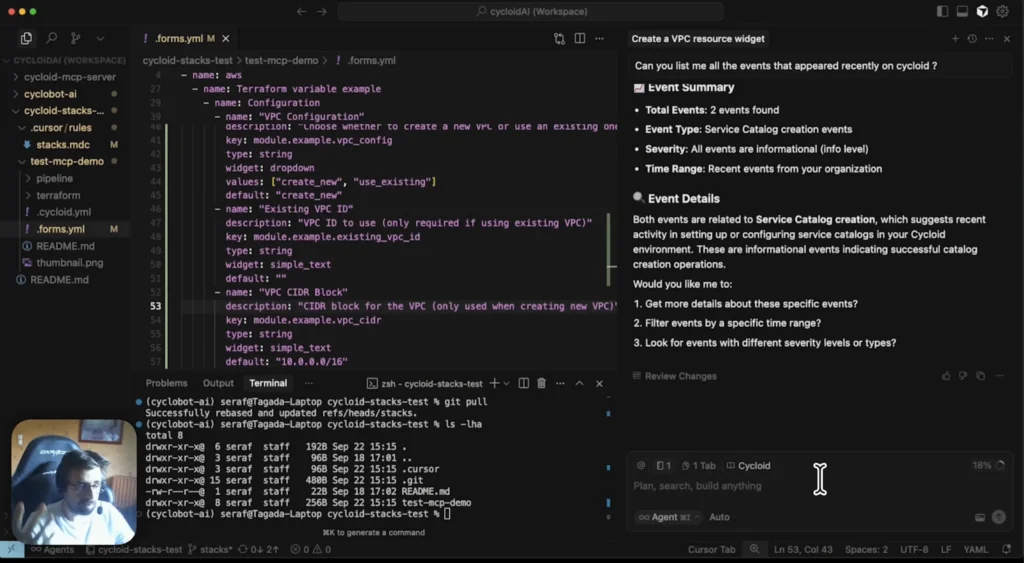

7. Accelerate Platform Adoption with AI

Meet Cycloid’s MCP (Model Context Protocol) server, a tool that eases the user journey across onboarding, configuration, centralization, usage, and calls to action.

Loved by teams

Pierre-Emmanuel Klotz

“Cycloid acts as a cornerstone with these tools, bringing something that we didn’t know before, something that we hadn’t already mastered before.”

Discover Cycloid now

Manage your cloud costs and carbon footprint with your unified Internal Developer Portal and Platform.